Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

In the previous edition in the article Figuring out the Opponent's hand in Spades we detailed the heuristic inference system used in our Spades program that allows each player to gradually model opponent hands. This is used to provide realistic skewed deals for the MCTS (Monte-Carlo Tree Search) that more closely matches the actual game situation than any simple random deal could. This is used very effectively for simple inferences, such as player discards, where the inference is confined to a limited number of cards.

However this heuristic inference on bidding has less obvious viability even though its impact is substantial as it dictates all card probabilities before trick play. The simple re-distribution of probability could fall down, particularly if presented with more than one strong bid, as significant re-shifts in probability might occur. This fragility was exposed in one beta test report that showed an example where this relatively simple approach to bidding inference gave a relatively poor projection. Which triggered this second look at bidding inference.

To address the issue we have now created a new inference system, just for bidding, that uses real historical information to determine the probabilities of each player holding each card, linked to the bidding sequence. This provides a much more plausible basis for bidding inference and avoids some of the significant flaws of the old system. It sits alongside the heuristic system for trick play bidding.

Background

In the introduction we indicated that the inference system used can perform badly during bidding. The beta report example that exposed a significant defect also revealed a significant heuristic defect. If we could create a universal table that projected probabilities based on real historical distributions of probability, it would offer a hands-on chance to re-assess the whole idea of the viability of using heuristics for this at all.

The purpose here was then to create a bidding inference profile based on actual observed bidding. The following describes how this is constructed and then looks at a real bidding example.

Implementation

First we need to define what we need. We want to create a profile of card distributions for the possible range of bids. We will use this to project card distribution probabilities for each of the 4 players. However these distributions will not be linked to North, South, East and West bidders, but by 1st, 2nd, 3rd and 4th bidder. All that matters is the bid and the position of that bid in the order, not who made it.

To fulfil this needs an array to record actual bidding samples from real play, as follows:

const TInt KMaxbid =7 ;// largest bid value used for learning #define M (KMaxbid+1) // bid order // 1 2 3 4 hasnt pl cards // has TInt iHas [M][M][M][M] [2] [4][64]; // size 2,097,152

The last index here [64] is mapped for each possible card. This could be

[52] but [64] is a convenient mapping.

The previous index [4] is for each of the 4 players, ordered by 1st, 2nd,

3rd and 4th bidder. Each card has a separate count for each bidder.

The next previous index [2] will be the counts [0] for hands that do not contain

the selected card and counts [1] for hands that do.

The final first 4 indexes are for the bids in order that they appear, or for

example a bid sequence 1,3,4,3 would index iHas[1][3][4][3]. Note that the

size of this index is limited to bids between 0 and 7. Any bid of 8+ is mapped

to a bid of 7 on the grounds that bids of 8+ will be rare and also quite similar

to a bid of 7.

This array is 2097k words or 8 megabytes. This is updated from a testbed console command that runs a loop, dealing a new hand and then playing the 4 bids and then repeating. This takes a long time so the console loop periodically writes the array iHas to a file, using a random number name. This allows the same task to be run for several days on all 40 cores that we have available for Spades testing. When ended the console scoops up all the files to create one combined iHas[] array with a billion bid card counts.

Note from examining the above it is clear that the same type of architecture is not possible for trick play inferences, where the range of possibilities is huge and therefore there is no realistic way of sampling this nor representing any such inference table.

Additional Processing

For bid sequences of (say) 3,3,4,2 the count samples might be very high, but less frequently visited totals such as the unlikely bid 0,0,2,6 will inevitably have small samples. That will lead to choppy sequences of probabilities where a 6H might be 20% then 7H 85% etc. to accommodate that the low count totals are averaged with neighbour counts.

In addition some bid sequences might never actually occur, so the existing set is analysed and similar sets copied to fill some of the gaps. This still leaves the possibility of some sets never happening, but might arise during actual play. That is addressed later.

The Target Array for the Engine to Reference

The above table is unnecessarily big, using 2x 32 bit words per probability, but the engine reduces the probability to 8 bit for use, as follows:

TProb KInferProb4[M][M][M][M][4][64]; // final probs 1,048,576 after 4 bids TProb KInferProb3[M][M][M] [4][64]; // final probs 131 072 after 3 bids TProb KInferProb2[M][M] [4][64]; // final probs 16,384 after 2 bids TProb KInferProb1[M] [4][64]; // final probs 2,048 after 1 bid

This generates 4 tables rather than 1 as after (say) 2 bids we still need a probability table, but this will be the average of the last 2 indexes of KInferProb4[M][M][M][M]. The 3 extra tables are actually small, so a small cost.

Application in the Program

An issue raised above is that the table cannot guarantee to provide a probability table for the actual bidding sequence. This is accommodated by always applying the old heuristic system but then, as each bid is made, the program checks to see if a valid history table entry exists for the current bidding sequence and copies this table overriding the existing heuristic iProbability table. Note that each card has 16 probabilities. E.g. West has 4 probabilities for 4H and East also has 4 etc.

A Walkthrough of a particular Bid

Comparing the existing old carefully constructed heuristic bidding inference and new history-based bidding inference will allow the former to be measured for accuracy as the latter is based on real actual bids with particular distributions. This will expose where heuristics were not good enough.

We will look at one particular example of the bid sequence 4,0,4,3 as follows:

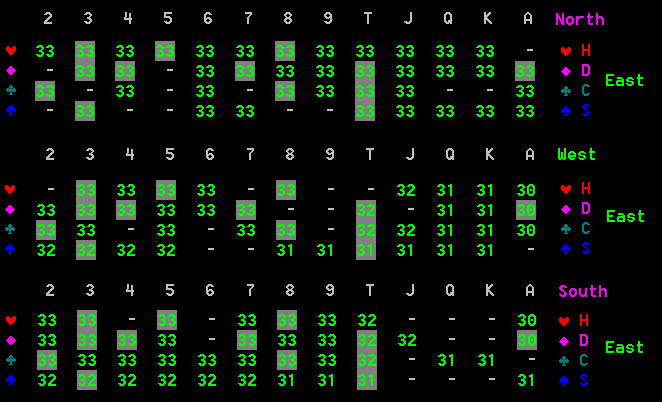

Figure 1 The deal and bidding

Here we see that North opens with a bid of 4, even though the trumps are all low and there is only one ace. Assuming no one goes nil, then North can lead with AH and then trump-on on hearts. However East actually went nil. A deep analysis of this hand shows that North would have been better to bid 2 or 3. With heuristic bidding inference North is 79% likely to bid 4 and with the new history bidding inference this reduces to 58%. The program is skewed to play aggressively.

We can compare the probabilities that this game generates with the old heuristic and new history-based inference tables. This is held in 16 tables of card distributions with 4 projections per player, but we will just look at each player's view of just East's hand, so only 25% of all these tables.

If we look at the situation after North's initial bid of 4 we have the following old heuristic inferences. Note that the backlit grey table entries indicate probabilities where the player does actually hold that card. If the inferences are good the values in the grey highlight squares should be high.

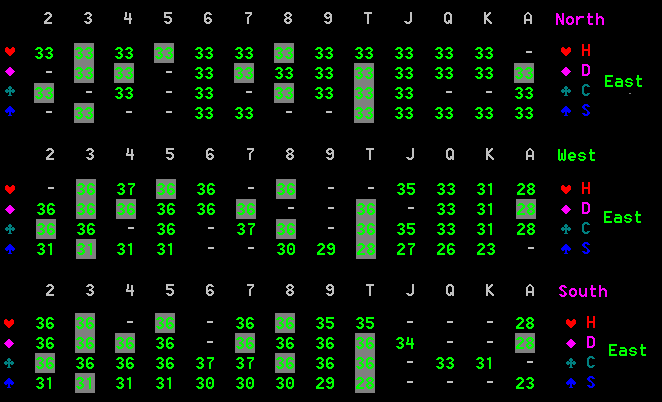

Figure 2 The heuristic-based projection that North, West and South have

of Easts cards after 1 bid

We also have the following new history-based inferences:

Figure 3 The history-based projection that North, West and South have of Easts cards after 1 bid

At this point we only have one bid, so very little to go on. However the heuristic table shows that North believes that East has an equal probability of holding any card. This sounds like an anomaly as North bid 4, so we should expect east to have a weaker hand. However North is not inferring from a bid of 4 but from what cards it knows it has. North in bidding 4 has no more information from its own bid.

If we look at the same table for the history inference we see the exact same distribution. However this is derived from inferring a generalised bit of 4 as the first bid and you might expect that this would result in an uneven spread of probabilities for East but again it is flat.

However West's and South's view of East shows a skew. The heuristic skew here is very marginal, expecting 31% and 30% chance of high cards instead of 33%, but no change for low cards. The history skew though is much more pronounced ranging over 23% to 37% instead of 30% to 33%.

Inferences after all bids have been made

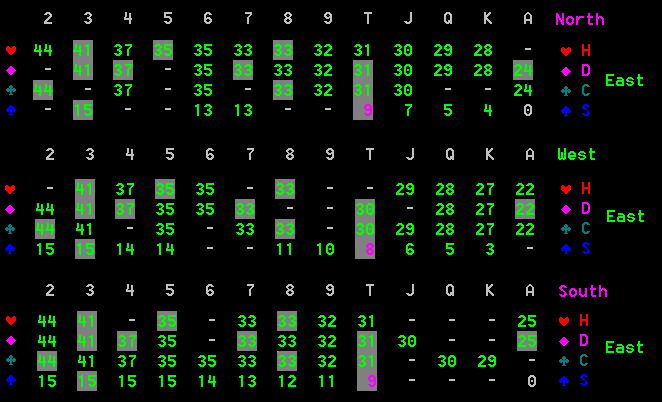

This is the distribution after all 4 bids for the heuristic inference:

Figure 4 The heuristic-based projection that North, West and South have of Easts cards after 4 bids

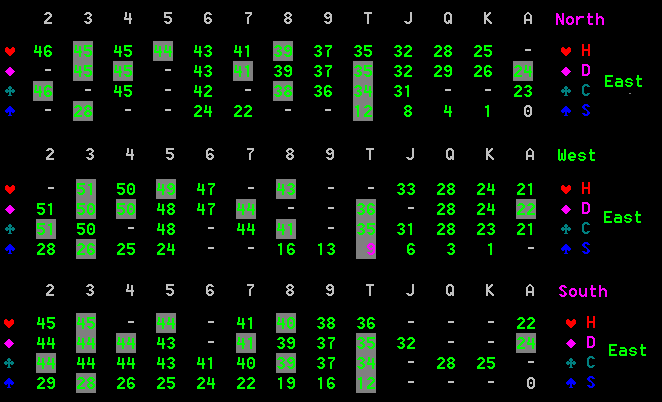

This is the distribution after all 4 bids for the history inference:

Figure 5 The history-based projection that North, West and South have of Easts cards after 4 bids

The most pronounced difference here is between the inferred probability for East (who bid nil) holding low trumps. The heuristic system expects a 15% chance of holding 2S compared to 28% or 29% for the history system. Note also that the heuristic system expects an only 1% chance of holding KS compared to 3% or 4% for the history system. These must expose real defects in the heuristic projection, which underestimates the chances of Nil player holding trumps.

Conclusions

The history based inference system must, in a sense, be "right". So from this we can determine where the heuristic inference was wrong and these can be corrected. At first glance this seems though to make the latter redundant as the history system must be right. Head to head the revised new system wins points 1.8% faster than the old system.

In terms of overall accuracy a fixed inference of 33% across all values would result in an average error of 44%. The heuristic system scores 43.3% and the history-based system 42.6%. At first glance this does not look impressive, but a perfect inference system would score little more as each player really has very little information to go on.

However this work is not complete as there are issues to address:

First the trials were based on self-play runs, so assume that the program makes good bids in the first place. It is then tested against the old heuristic system so we should expect it to do well as it has an accurate model of just the existing system's bidding because that was created using that same opponent. The next step is to model against strong human play, which will provide a better base for anticipating card inferences. This is designed for our game product. It could take this further and have a history inference table for strong opponents and a second table for weaker ones. It could then examine the human player's record and choose the appropriate table.

The other issue is that this is run for a single set of rules. If the method is trialled for all combinations of rules then this falls down as each table is a megabyte, and we might need thousands of tables. Instead the heuristic system could be used for all but the main default rule combination by running trials on some of these. This will then improve the existing heuristic system. Shifts made in tuning one set of rules will probably also have some applicability for other similar sets.

Net this particular work seemed relatively straightforward to try, but has proven really quite hard to evolve. As a practical direct end-product system it is fairly costly, but the input it provides gives meaningful insights into the existing heuristic system that otherwise was created by using (at best) intelligent guesswork with very little to actually validate its correctness.

This is one of the few instances where we have a touchstone which is very close to being definitively right, instead of relying instead on touchy-feely heuristics for which the only validation is performance against other versions using similar heuristic rules. This offers a re-assuring base from which to make more bold enhancements.

Jeff Rollason - September 2019