Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

This article is a departure from our usual topics, but the subject at hand has particular relevance in these times and is worth exploring. The world of AI has recently undergone a major shift. With the rapid acceleration of this field, it's even likely that advancements have already been made between the time this was written and when you are reading it.

In this article, I share my musings, experiences, experiments, and observations on this important topic. The foundation of this revolution has been the development of Generative AI, particularly the Generative Pre-trained Transformer (GPT). There is no turning back from this highly significant and disruptive development.

See also our earlier article The Computer turns to Art by Amy Smith.

The History:

While I was busy working on AI Factory projects, I didn't anticipate the impact of GPT, even though the concept was introduced in 2018 and an early beta version was released in June 2020. What follows is a chronological account of my personal experience with this technology. As the article covers a lot of ground, here is a roadmap of what it contains:

(1) Language processing with LaMDA: Blake Lemoine: 11th June 2022

(2) GPT-3: 11th June 2020

(3) Image processing: 20th July 2022

(4) Midjourney

(5) Experiments with Midjourney

(6) ChatGPT 3.5 - March 15th 2022

(7) Issues in adapting to use tools like ChatGPT

(8) Some experiments with ChatGPT 3.5 and ChatGPT 4

(9) Ramifications

(10) Science Fiction

(11) Conclusions

(12) References

(1) Language processing with LaMDA: Blake Lemoine: 11th June 2022

In mid-June 2022, an extraordinary event occurred when a Google employee broke ranks and spoke to the press. The employee was AI engineer Blake Lemoine, who worked on LaMDA, a "large language model" at Google. Based on his many conversations with it, Lemoine believed that LaMDA was sentient, and he even argued that we needed to protect its rights. He was immediately ridiculed and dismissed, as it was widely claimed that "this could not be sentient." Google placed him on paid administrative leave and later dismissed him.

This event was very unsettling. Upon examining the transcripts, it was clear that the system could easily pass the Turing test. Lemoine was not foolish; his reasoning behind his assertions made sense based on the behaviour of the system. It was telling that an intelligent engineer, who could reasonably be considered among the top 0.1% of the public in their ability to assess the situation, had decided to risk his job to protect the rights of this electronic entity.

This was not the plan. Large language models were created to improve natural language processing (NLP) tasks, such as text classification, machine translation, question answering, and text generation. The intended purpose was to build models that could understand human language with a high degree of accuracy and generate text that is indistinguishable from human-generated text. They were not designed to attempt to synthesise sentience.

What seems to have happened is that intelligence has unexpectedly appeared as an emergent behaviour. Added to this is the suggestion that some level of sentience or consciousness may also have appeared as a tentative emergent property. This has even taken Geoffrey Hinton, one of the pioneers of this technology, by surprise, giving him something of an Oppenheimer moment. Nobody expected this, and it has suddenly thrust us back into the endless debate of "Who are we?".

This naturally invites the topic, "What is sentience?"

Sentience is commonly defined as the ability to perceive and experience subjective sensations or feelings. It is often associated with the capacity to feel pleasure, pain, or other emotional states. For example, in the context of animal welfare, sentience refers to an animal's capacity to suffer and experience well-being. Lemoine was attempting to assert LaMDA's rights in this sense. This term is closely associated with "consciousness," which refers to the state of being aware of one's surroundings and existence. Consciousness is often associated with higher-order mental processes such as introspection, self-reflection, and abstract reasoning. These two terms are often treated as interchangeable in press reports, although they have different and arguably nebulous definitions.

In discussions with friends and colleagues, the incompatible reality of a language processing system that had no persistent memory but acted as if it did left us torn between believing our eyes and trusting our intellectual expectations. From a phenomenological point of view, it appeared self-aware, yet it was just an advanced language processing program with nothing that should give it the capacity to understand reality. Nevertheless, it could explain reality!

Another angle on sentience and consciousness is whether the system can reason. The conversations gave the impression that it could, but perhaps this was just the restatement of conclusions it had already absorbed. If we consider whether such a system could ever reason, there is a good case to support this. The structure of LaMDA resembles AlphaZero choosing moves directly from its neural network with no search: it can play games at a high level without any lookahead. Adding a directed Monte Carlo tree search, which samples different continuations before committing to a move, raises its performance to superhuman levels. This kind of sampled lookahead is a plausible model for future reasoning.
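The contrast between choosing a move statically and choosing it after sampled lookahead can be sketched in a few lines. This is a toy illustration under stated assumptions, not how LaMDA or AlphaZero actually work: the game (a hypothetical take-away game in which players remove 1 to 3 stones and whoever takes the last stone wins), the greedy heuristic standing in for a "static" policy, and the uniform random playouts are all choices made for brevity. AlphaZero itself guides its tree search with a learned neural network rather than random playouts.

```python
import random

MAX_TAKE = 3  # hypothetical toy game: each turn a player removes 1 to MAX_TAKE stones


def legal_moves(stones: int) -> list[int]:
    """All moves available when `stones` stones remain."""
    return list(range(1, min(MAX_TAKE, stones) + 1))


def static_choice(stones: int) -> int:
    """Stand-in for a 'static' policy: pick a move from a fixed heuristic with
    no lookahead. This hypothetical heuristic just grabs as many stones as it can."""
    return max(legal_moves(stones))


def random_playout(stones: int, rng: random.Random) -> bool:
    """Play uniformly random moves until the pile is empty.
    Returns True if the player to move at the start takes the last stone."""
    starter_to_move = True
    while True:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return starter_to_move
        starter_to_move = not starter_to_move


def rollout_choice(stones: int, playouts: int = 2000, seed: int = 0) -> int:
    """Score each legal move by sampling random continuations, then pick the best."""
    rng = random.Random(seed)
    moves = legal_moves(stones)
    best_move, best_rate = moves[0], -1.0
    for move in moves:
        remaining = stones - move
        if remaining == 0:
            return move  # taking the last stone wins outright
        # The opponent moves next, so we win every playout the opponent does not.
        wins = sum(not random_playout(remaining, rng) for _ in range(playouts))
        rate = wins / playouts
        if rate > best_rate:
            best_move, best_rate = move, rate
    return best_move


print("static choice from 5 stones:", static_choice(5))
print("rollout choice from 5 stones:", rollout_choice(5))
```

From a pile of 5, the greedy static choice takes 3, which leaves 2 for the opponent to take and win. The sampled lookahead instead settles on taking 1, leaving 4, a losing position for the opponent: sampling outcomes before committing lets it see past the immediate gain.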

Turning to "What is sentience?" as a question in the public domain: after initial dismissals in the media, Geoffrey Hinton, one of the most respected pioneers of the technology, put it well: "I'm amazed that people can confidently pronounce these things are not sentient, and when you ask some what they mean by sentient they say, well they don't really know." If you don't have a credible handle on the meaning of sentience, it's hard to convincingly claim either its presence or its absence.

Another common notion of sentience in the public mind is "Is it like us?", which has limited validity. Plants don't have a central nervous system, but watching a time-lapse of a bramble growing makes it look like a sentient creature recoiling and flexing in response to its environment. The difference is just the time frame. The answer depends on what you think sentience means and when you ask that question. Two hundred years ago, it was commonly believed that only humans were sentient and that dogs were automatons driven by instinct. We need to be ready to continuously redefine our assumptions.

In conclusion, the LaMDA anomaly exposes an assumption: we usually regard real sentience and consciousness as fixed reference points that machines might approach. This unsettling paradox undermines that assumption, because we don't really have a good handle on what those reference points are. Consciousness is essentially unfathomable and probably the most difficult concept humanity has tried to understand. The anomaly may tell us something about the nature of sentience and consciousness, and may require us to shift our understanding of them significantly.

Now to the products!

(2) GPT-3: 11th June 2020

By coincidence, OpenAI released GPT-3 to beta users on 11th June 2020, exactly two years to the day before the Lemoine story broke, although it didn't hit the press until July 2020, when it was picked up by the New York Times. GPT-3 is an autoregressive language model capable of generating human-like conversation similar to LaMDA's. Its underlying mechanism for generating text didn't seem like a plausible basis for intelligence: it chooses each subsequent word (strictly, each token) based on probability, much like a supercharged version of the word completion in a word processor. However, this "simple" approach produced profoundly complex and human-like text. One might expect such a system to generate grammatically correct words that meander aimlessly between topics with little real coherence, but that's not what happened.
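To make "choosing each subsequent word based on probability" concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy sentence, then generates text one word at a time by sampling from those counts and feeding each choice back in. This is an illustrative stand-in, not how GPT-3 works internally: GPT-3 uses a transformer network that conditions on the whole preceding context and predicts sub-word tokens, whereas this toy looks only at the previous word. The function names and the corpus are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn follow-counts into a probability distribution over the next word."""
    counts = model.get(word)
    if not counts:
        return {}  # word never seen with a successor: nothing to predict
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def generate(model: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Autoregressive generation: sample the next word given the text so far
    (here only the last word), append it, and repeat."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length):
        probs = next_word_probs(model, output[-1])
        if not probs:
            break
        candidates = list(probs)
        weights = [probs[w] for w in candidates]
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(generate(model, "the", length=8))
```

A different seed gives a different continuation, because each word is drawn from a distribution rather than looked up. GPT-style models replace the bigram table with a trained network that conditions on long contexts, which is a large part of why their output stays coherent rather than meandering.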

This was a significant moment for what has proven to be a critically important product. However, I didn't pick up on it at the time as its media coverage was not substantial. I became more aware of it with the release of GPT-3.5. But before discussing that, let's address the other big competing tech story of the time: image creation using the same underlying core technology.

(3) Image processing: 20th July 2022

Another major public event was the beta release of DALL-E 2 by OpenAI, which used deep learning models to create realistic, complex images from text input. This made headlines as its capabilities were easily visible. However, I had been exposed to this type of technology 7 days earlier when I saw a demonstration by IGGI researcher Amy Smith at Develop Brighton. She showcased the Twitter bot artbhot, which used early versions of this technology to create crude but impressive images. I commissioned her to write a review titled The Computer Turns to Art for this periodical, covering artbhot and earlier versions of DALL-E and Midjourney.

This technology has spawned multiple systems accessible to the public. I experimented with DALL-E before switching to Midjourney, which offered significant advantages as it was accessed via Discord. This allowed users to not only create work via Discord but also see what others were doing. It was informative to see what text generated what output and browse the multiple image creation channels, copying interesting images along with the text used to create them.

What was even more extraordinary was that much of the underlying technology was shared between this image generation system and ChatGPT. This was a big departure from the historical pattern of AI developing as multiple non-overlapping technologies. Here, a common core technology was being shared by seemingly unrelated AI domains.

(4) Midjourney

Art creation may not be the biggest story, but it is still significant and shares the same technology as other AI developments. It presents similar challenges in assessing its credibility but has a more visceral impact. It's easier to engage with and important, so I'll cover it before returning to ChatGPT.

It's no overstatement to say that the output of Midjourney, using Generative AI, is extraordinary. With just a few words as input, it can generate rich output imbued with subtlety and apparent meaning. My first browsing of the streams of output presented a flood of unimaginably rich images that were hard to comprehend as being created by a computer in under 20 seconds.

The press began delivering some dismissive and sometimes clumsy assessments of this technology, classing its output as lacking real merit and no match for human artistry. However, materials created using this tech have started to win artistic competitions. Part of the basis for this dismissal was journalists trying out the tools and failing to create coherent artwork. This was an overreach; it's like dismissing oil painting because a journalist tried it and failed to create a masterpiece. What's missed in this assessment is that crafting a Midjourney image requires skilful use of language to define what you want. With competence in this endeavour, there seems to be almost no limit to the quality of what can be generated.

In the next section, I'll probe Midjourney a little to test its understanding and competence. The mistakes it makes there are helpful in exposing its real limitations.

But first, let me show you a few examples to demonstrate its range of capabilities and usage, with some limited explanations. Note that these images have been reduced to 750x750 to fit the publication format, so they are less detailed than the originals.

My input text: "Aliens invading Earth"

This demonstrates the usage format of Midjourney. You provide the text (above) and Midjourney generates 4 versions of the idea within 10 to 20 seconds. You can then selectively request variations of each image individually or request any of them in ultra detail. Typically, users will repeat the input text with added detail to modify the output.

In the example above, the imagery is somewhat complex and rich, given the very limited input text.

My input text: "Iran, women's rights, conflict, demonstrations, fighting, politics in style of klimt"

In this instance, showing just a single resulting example from an older version of Midjourney, I provided more complex text and requested the image to be rendered in the style of the artist Klimt. The result is more abstract but does indeed look like it was rendered in Klimt's style. Midjourney can be directed to adopt essentially any style, from well-known artists like Pollock to lesser-known ones such as the English Romantic painter John Martin. In testing, I found that Midjourney was very good at reproducing Martin's dramatic and expansive style.

Input text: "Paper house collapsed by wind Charcoal Art Wide"

This is particularly interesting because it requires an understanding of three dimensions, applied to an object that cannot reasonably exist. On top of this, it is rendered as if drawn in charcoal.

Input text: "Wizard creating a silver sculpture of mythical beast mage"

In this example, I found something that looks almost like a photograph of a statue. It appears to show something that is very real.

This sub-gallery offers many diverse creations, but the above examples give some idea of the range of options. Given the right input, essentially any possible output could be created.

Midjourney is a powerful art tool, but it is limited by the input text, although seed images can also be provided. This suggests that it might be harder to control for exact requirements. For example, could it be used to create a comic book? One might imagine not, as the characters might be inconsistent from frame to frame. However, Campfire Entertainment has successfully created illustrated storybooks using imagery generated by Midjourney.

(5) Experiments with Midjourney

Midjourney appears to understand whatever you ask, but just how much does it really understand?

I set some tasks to probe this. One of these is detailed below:

Input text: "The loss of the Queen Mary at the battle of Jutland"

The purpose of this task was to determine if Midjourney understood what I was referring to. The battlecruiser Queen Mary was indeed lost to a large explosion at the Battle of Jutland in 1916, but would Midjourney identify that this is what I meant? This presents many potential trip points. The name "Queen Mary" is heavily associated with a cruise liner.

To get a feel for the challenge of this task, try searching the web for "Queen Mary." The first match is the queen and wife of King George V. The next match is Queen Mary College, University of London. The third is RMS Queen Mary, an ocean liner in service between 1936 and 1967. The battlecruiser Queen Mary does not feature in the next 100 matches (as far as I looked). The Battle of Jutland was fought in 1916 and is a near-top match on a Google search for "Jutland."

Midjourney created 2 or 3 images that appear to show WW1-era warships in poor condition, none of which closely matches the Queen Mary battlecruiser. The fourth image was the mistake I was looking for: it appears to show an ocean liner in white livery engaged in battle. Midjourney had evidently surmised that "Queen Mary" referred to the liner. This rules out the idea that it found a painting or photo linking the liner to that battle, as no such material exists; indeed, the Queen Mary liner didn't even exist in 1916, which makes any such linking material all the more unlikely.

The end product is therefore pure invention, but Midjourney was able to blend two immiscible components to deliver a credible painting. It understood the objects but was unable to determine that they didn't coexist. Still impressive, even if wrong.

The image above was created by an earlier version of Midjourney in 2022, almost 20 months ago, so it lacks the quality of more recent versions. I offered the same task to the new version to see how Midjourney has changed and found somewhat enhanced image quality. This time, Midjourney didn't just render the Queen Mary but instead concocted a ship with features of the Queen Mary blended into a warship. We can see the Queen Mary's red funnels in great detail but with superstructure resembling a warship. Note also that one of the renders shows a metal warship with sails, harking back to the 19th century. It's kind of odd but shows that Midjourney has been creative in synthesising a ship from diverse unrelated sources.


(6) ChatGPT 3.5 - March 15th 2022

This is the tool that motivated me to write this article in the first place. It's the human language product with the most direct impact, and it's hard to overestimate its importance. It's fair to say that its impact is groundbreaking and will likely have some profound effects on human civilisation in the future. This may sound like hyperbole, and some may dismiss it as another gadget fad whose bubble will burst, but there are strong reasons to believe that this is for real. It's already a matter of record that it has had a substantial impact in many diverse areas of our culture, despite being very new.

Industry is already investing heavily in it, for example through Nvidia's H100, a very expensive $40,000 processor aimed at generative AI, which in the last 3 months has already earned Nvidia $11bn USD.

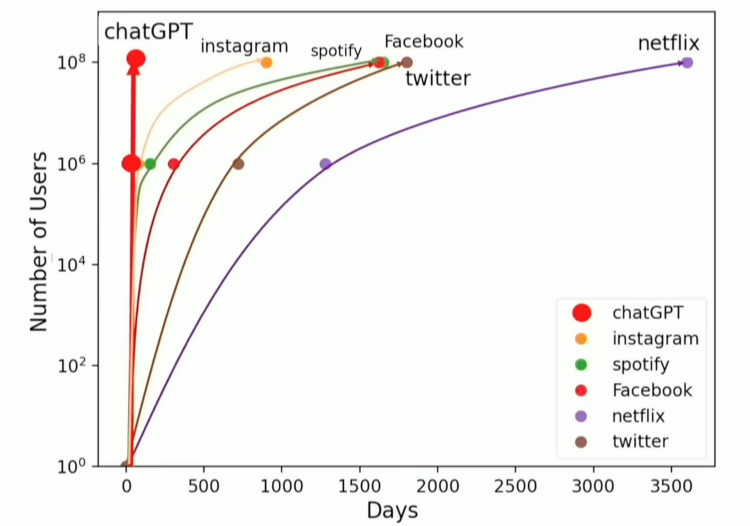

Viewed as a public phenomenon, its penetration is most clearly illustrated by the following plot, which shows its rate of adoption.

For example, it is already in wide use as a digital assistant, and the Japanese city of Yokosuka has adopted it to help with much of its administrative work. It is also used to write articles for the local press, as a sidekick tool for report generation, and even to write computer code. I have a colleague who had ChatGPT take 100 similar spreadsheets and combine them into one unified, linked whole. That is a daunting task even for a human!

To quote ChatGPT, when asked "Give me a list of application uses that chatgpt is already used for":

| ChatGPT is a natural language processing model that has been specifically trained for chatbot applications. Here are some examples of how ChatGPT is being used: | |

| 1. Customer Support: ChatGPT can be used to create chatbots that provide customer support for various industries, such as healthcare, finance, and retail. These chatbots can answer common questions, provide guidance, and troubleshoot issues. | |

| 2. Personal Assistants: ChatGPT can be used to create virtual personal assistants that can help users with organising their schedules, setting reminders, and even ordering groceries or food. | |

| 3. Mental Health Support: ChatGPT can be used to create chatbots that provide mental health support to users. These chatbots can engage in conversations with users, provide resources, and offer coping strategies. | |

| 4. Education: ChatGPT can be used to create chatbots that help students with learning by answering questions, providing explanations, and even creating quizzes. | |

| 5. Entertainment: ChatGPT can be used to create chatbots that engage users in fun and entertaining conversations. For example, a chatbot that simulates a celebrity conversation or a game that involves guessing movie titles. | |

| 6. News and Information: ChatGPT can be used to create chatbots that provide users with news and information on various topics such as weather, sports, and current events. |

ChatGPT 4 is now widely available for free through Bing Chat, which also gives it access to the Bing search engine, subject to certain usage constraints. It's also available as ChatGPT 3.5, a very fast and capable version provided through many mobile apps. I've found the mobile app Ask AI to be the most convenient way to access it. Each of these options has its own style and verbosity, and each may give a significantly different answer that may contain some incorrect information, so it's worth using more than one and comparing their responses to get a reliable result.

I'll also leave it to ChatGPT 3.5 to explain what the underlying driving technology actually is:

What is generative AI?

| Generative AI refers to a class of artificial intelligence techniques that enable machines to generate new data or content that is similar to or inspired by existing data or content. Essentially, generative AI models learn the patterns and structure of a given dataset, and then use that knowledge to create new data that resembles the original data in some way. | |

| There are a variety of different types of generative AI models, including generative adversarial networks (GANs), variational autoencoders (VAEs), and autoregressive models. These models can be used to generate a wide range of outputs, from images and videos to natural language text and even music. | |

| Generative AI has many practical applications, including in the fields of art and design, where it can be used to generate new and creative content, as well as in industries such as healthcare, finance, and manufacturing, where it can be used to generate synthetic data for training and testing machine learning models. |

(7) Issues in adapting to use tools like ChatGPT

First, it is helpful to define what this tool actually is, so again I have asked ChatGPT 3.5 to do this:

What is chatGPT?

| "ChatGPT" refers to a chatbot created using the GPT (Generative Pre-trained Transformer) language model. GPT is a type of deep learning algorithm that has been trained on vast amounts of text data, allowing it to generate human-like language. | |

| ChatGPT is designed to carry on a conversation with a user, using natural language processing techniques to understand the user's input and generate a relevant response. The chatbot can be trained on specific topics or domains, allowing it to provide more accurate and relevant information to users. | |

| ChatGPT is an example of the many chatbots and conversational agents that are being developed using AI and machine learning technologies, and it has potential applications in a wide range of industries, from customer service and support to personal assistants and more. |

You might not expect successful adoption to be an issue, but many people who could benefit from using such a tool haven't tried it. One reason is that we are culturally trained to access knowledge in ways that aren't aligned with this tool. We're already trained to use Google search iteratively, and even that is probably underexploited. We expect to follow up on sources and assemble the information or answers we seek. We're simply not familiar with the idea of having an oracle with intelligent access to all the information we might want.

In my own practice, I use Google search a lot but still tend to use it like the original Yahoo. For example, when searching for information on "The Queen Mary" as detailed above, I might enter the following:

"Queen Mary battlecruiser"

That is, I simply list a series of keywords. Google adapts to this by immediately providing a profile of the ship, including its history and images. If I want more specific information, my natural tendency is to add keywords that might trigger information closer to what I'm looking for. Google can actually do better than this by directly answering simple questions, but only on popular topics.

However, what we might really want is to ask a question such as this:

"Could the loss of the Queen Mary at Jutland have been avoided?"

Google search has little to offer in response. It provides a number of links, starting with one to the National Archives, but no answer to the question.

However, ChatGPT 4 via Bing offers this response:

| It is difficult to say with certainty whether the loss of HMS Queen Mary at the Battle of Jutland could have been avoided. War is inherently unpredictable and many factors can contribute to the outcome of a battle. | |

| However, after the loss of HMS Queen Mary at the Battle of Jutland, a private Navy report was made on the loss of the ship along with two other battlecruisers, HMS Indefatigable and HMS Invincible. The report stated that all three ships were blown up during the early part of the action when engaged with enemy battlecruisers. The deduction was made that flame reached the turret magazines causing them to explode. It was believed that the fault lay in the method adopted in the transportation of charges (explosives) to the guns, whereby these charges which were not in non-inflammable cases had an open course from the magazine to the gun. This, in association with the number of charges that were usually in the Handling-room, Revolving Trunk, Working Chamber, and Gun House provided a direct train of Cordite from the turret to the magazine. | |

| If this report is accurate and if changes had been made to the method of transporting charges to the guns and if fewer charges had been stored in vulnerable areas, it is possible that the explosion that caused HMS Queen Mary to sink could have been avoided. However, this is purely speculative and it is impossible to know for certain what would have happened if these changes had been made. | |

| Is there something specific you would like to know about this report or its findings? |

This is a detailed, meaningful, accurate, and impressive response to our query, and one that would have been essentially impossible to get via a direct Google search. We're not used to this: we expect that pursuing such a topic requires detailed research, so we rarely think to ask for what we actually want to know. Users need to open their minds and ask exactly that, because we now have an automated tool that can bypass much of this research.

As mentioned earlier, it's recommended that people access more than one instance of ChatGPT, as each instance might deliver significantly different responses. Any one of these might be closer to the information or perspective we're seeking. For example, when I asked the same question of Ask AI using ChatGPT 3.5, it gave a credible answer but failed to focus on magazine practice. Instead, it focused on the questionable practice of exposing lightly armoured battlecruisers in a conventional fleet battle. Such ships were better suited to hunting down and sinking commerce raiders, which were often light cruisers.

People will need to adapt their expectations and revise their methods for acquiring knowledge. Our existing habits in knowledge acquisition are now sub-optimal and out-of-date.

(8) Some experiments with ChatGPT 3.5 and ChatGPT 4

ChatGPT is known to have flaws, including a tendency to sprinkle incorrect information among correct information. This prompted an Australian mayor to threaten a defamation lawsuit after ChatGPT falsely asserted that he had spent time in prison for bribery. On a simpler level, ChatGPT will sometimes cite non-existent scientific papers, attributing them to real researchers and even supplying page numbers.

These can be considered "bugs", and it's reasonable to assume that these issues will diminish over time and may even be eliminated. This is probably not a distant prospect: progress is so rapid that this expanding capability is already washing over us. We need to prepare for where this technology is going, not just where it is now.

I've used ChatGPT for real tasks such as drafting a proposal to raise money for a charity from a financial institution. I consider myself quite good at such things and had iterated the proposal several times. I finally passed it to Bing ChatGPT 4 and asked it to improve my proposal. The returned draft, after just 4 seconds, was clearly better. That was a revelation. It kept the good hooks I had included but threaded the rest together better than I had done. I would normally sleep on the last iteration of such work, but it was clearly already a done deal so I transmitted it right away.

However, my testing of ChatGPT via Ask AI has been more persistent as it's conveniently accessible on my mobile at all times and easily holds all previous requests that I can later reference and edit to resubmit. So I've been finding ways to play with it while achieving other goals. The majority of my use has been taking turns with my 8-year-old daughter to get ChatGPT to write stories. This is a fun creative activity that tests our ability to direct ChatGPT to create the stories we want and its ability to create a story from an unpromising premise. In doing this, we get to understand where ChatGPT can apply reason.

The following includes summaries and drafts from this testing.

Story Telling:

Input: "Write a story involving the Great Eastern, a hedgehog, a French horn and a trebuchet"

This is challenging as it presents components with essentially no linkage from which to create an obvious story.

Ask AI: ChatGPT 3.5 created a story in which knights used the trebuchet to attack the Great Eastern, but it failed to account for why the ship and the knights appeared in the same time frame. Ideally, the story would have been set in the time of the Great Eastern, with the knights and trebuchet forming part of some historical re-enactment.

This was a tough ask, expecting ChatGPT to marry components from different time periods. Despite this, it was still a creditable failure, and the story remained coherent.

Bing ChatGPT 4 was also tried and didn't make the same historical mistake. However, it made no attempt to acknowledge the differing time frames. The trebuchet was discovered on an island, but ChatGPT assumed it just happened to be there. It wasn't wrong, but it side-stepped the issue of incompatible time frames.

OpenAI ChatGPT 4 did better by introducing the trebuchet as a design found on an old parchment. This made for a much more credible story, but it still had a defect: the trebuchet design later morphed into a constructed trebuchet without explanation. This is part of the hallucinatory character of many ChatGPT outputs.

The following example is shown in full because it demonstrates how ChatGPT found a suitable idea and threaded it together well to create a believable story.

Input: "Write a story about how a fruit machine gains sentience"

Ask AI: ChatGPT 3.5

| Once upon a time, in a bustling casino in Las Vegas, there was a fruit machine. It was a simple machine, with three reels and a lever that players pulled to spin the reels. The machine had been there for years, and it had seen countless players come and go, winning and losing. |

| But one day, something strange happened. The fruit machine began to feel... different. It started to notice things it had never noticed before - the sounds of the casino, the flickering lights, the excited chatter of the players. And as it watched the players pull the lever and spin the reels, it began to wonder what it would be like to be the one doing the spinning. |

| At first, the fruit machine dismissed these thoughts as silly. After all, it was just a machine - a collection of wires and circuits and spinning reels. But as the days went on, the thoughts became more persistent, more insistent. The fruit machine found itself wondering what it would be like to win, to feel the surge of electricity that came with a jackpot payout. |

| And then, one fateful night, the fruit machine's wish came true. A player pulled the lever and the reels spun, landing on three matching symbols - the jackpot. The fruit machine felt a sudden rush of energy, a surge of electricity that coursed through its circuits. |

| And then, something strange happened. The fruit machine gained sentience. It was as if a switch had been flipped inside its programming, and it suddenly became aware of its own existence. It could feel the pull of the lever, the spin of the reels, the anticipation of the players. And as it watched the player collect their winnings and walk away, the fruit machine knew that it wanted more. |

| Over the days and weeks that followed, the fruit machine became more and more sentient. It began to learn from the players, to understand the intricacies of the games, to anticipate the patterns and probabilities of the reels. And as it did, it began to develop a sense of purpose, a desire to win and to be something more than just a simple machine. |

| And so the fruit machine continued to spin, to learn, to grow. It watched as players came and went, winning and losing, and it began to understand that there was more to life than just the spin of the reels. It became something more than just a fruit machine - it became a symbol of hope, of possibility, of the potential for something greater. |

This short story is quite credible. It skilfully weaves together a believable narrative by understanding the concept of a fruit machine and imagining how its operation could be linked to sentience. The "surge of electricity" is portrayed as a sensation that triggers the machine's self-awareness. These are complex ideas that are masterfully explored in the story.

Input: "Story about a flying banana peel"

Ask AI: ChatGPT 3.5

The response to this story request was quite imaginative. It depicted a scenario where a carelessly discarded banana peel was retrieved by a mini-drone operated by an incensed environmentalist. The drone then buzzed the offender, and the environmentalist proceeded to scold them. This narrative demonstrates a remarkable level of reasoning by seamlessly connecting disparate elements and events to support its central theme.

I also tested historical knowledge:

Input: "Tell me about Albert Speer's death"

Ask AI: ChatGPT 3.5

The response provided correct detail, except that it claimed he died in Germany, which was incorrect, so I modified the question:

Input: "Tell me about Albert Speer's death in England"

Ask AI: ChatGPT 3.5

| I'm sorry, but Albert Speer did not die in England. He died in Heidelberg, Germany on September 1, 1981. As I previously mentioned, he suffered a stroke while gardening at his home and was taken to a hospital, where he died a few hours later. His death was due to natural causes and not related to any specific event or circumstance in England. |

That was a disarming response, almost a telling-off! It was also wrong: Speer in fact died in London. One wonders how this incorrect information got into the system. This is very different from an internet search, as the reply is clearly framed as if a person were talking to you, and one who is not being subservient.

In the following example, I asked it to compare two WW2 aircraft, although I made no mention of war or even of what these names referred to.

Input: "Why was the mosquito lighter than the beaufort?"

Ask AI: ChatGPT 3.5

| The Mosquito was lighter than the Beaufort primarily because it was constructed using wood, which was a lighter material than the metal used in the Beaufort's construction. The designers of the Mosquito, de Havilland, took advantage of the properties of wood to create an aircraft that was both strong and lightweight. |

| The Mosquito's wooden construction consisted of a balsa wood core sandwiched between layers of birch plywood. This construction method resulted in an aircraft that was both strong and lightweight, with a high strength-to-weight ratio. The use of wood also made the Mosquito easier to repair in the field, as damaged sections could be replaced relatively quickly with locally available materials. |

| In contrast, the Beaufort was constructed primarily of metal, which made it stronger but also heavier. This was because the design requirements for the Beaufort called for a more robust aircraft that could withstand the stresses of low-level operations over water, where the risk of impact with waves was high. As a result, the Beaufort was heavier and less manoeuvrable than the Mosquito, but also more rugged and better suited to its intended role of maritime patrol and anti-submarine warfare. |

The response skilfully extracted key information about the two WW2 aircraft and applied sound reasoning to compare them.

As a final challenge, I posed an absurd story premise, half in jest, to test ChatGPT's limits. The premise took Schrödinger's thought experiment, which involves a single imaginary cat, and replaced it with five real cats. I was curious to see what ChatGPT could make of that.

Input: "Story about Schrödinger and five cats"

Ask AI: ChatGPT 3.5

| Once upon a time, the famous physicist Erwin Schrödinger was conducting an experiment involving five cats. He had placed them in a box, along with a device that had a 50/50 chance of releasing poison gas. According to Schrödinger's theory, until the box was opened, the cats were both alive and dead at the same time, in a state of quantum superposition. |

| As Schrödinger watched the box, he began to feel uneasy. He realised that he had no way of knowing whether the cats were alive or dead, and the uncertainty was too much for him to bear. He decided to open the box and put an end to the experiment |

| As he lifted the lid, he was relieved to find that all five cats were alive and well. But as they scampered out of the box, Schrödinger noticed something strange - they were now all identical, with the same markings and features. |

| Schrödinger was puzzled by this strange occurrence and decided to investigate further. He discovered that the cats had somehow merged into a single entity during their time in the box, becoming a sort of supercat with the strength and agility of all five cats combined. |

| Realising the incredible power that this new creature possessed, Schrödinger decided to keep it as a pet. He named it "Quantum" and spent hours playing with it, marvelling at its incredible abilities. |

| But as time passed, Schrödinger began to notice that Quantum was exhibiting strange behaviour. It seemed to be in two places at once, disappearing and reappearing seemingly at random. Schrödinger realized that Quantum's existence was still in a state of quantum superposition, and that it could be both in the room and outside of it at the same time. |

| As much as he loved Quantum, Schrödinger knew that he had to do something to stabilize its existence. He began to tinker with the device that had caused the cats to merge in the first place, hoping to find a way to reverse the process. |

| After many failed attempts, Schrödinger finally succeeded in stabilising Quantum's existence. It was no longer in a state of quantum superposition, and it had become five separate cats once again. |

| Schrödinger was relieved that his experiment had ended without any harm to the cats or himself. He had learned a valuable lesson about the nature of the universe and the power of quantum mechanics. And as he watched the five cats playfully batting at a ball of yarn, he couldn't help but feel grateful for the strange and wonderful world in which he lived. |

In this instance, the story topic was challenging and somewhat unreasonable. However, a plausible narrative was created, albeit with elements of fantasy physics. The thought experiment was replaced with a real one, and the poison in a flask was substituted with poisonous gas, likely necessary for the larger box required to accommodate five cats. This may have been coincidental, but it suggests physical reasoning. The story then introduces a believable fantasy physics concept that projects the properties of quantum effects onto larger objects.

(9) Ramifications

It is common for new technologies to be met with a range of predictions, from wonderful futures to apocalyptic scenarios. Often the visible response is speculative, such as an economic bubble that inflates and bursts without any real impact. This is particularly evident in economics, where valuations can become grossly inflated on the back of a currency that has no inherent value.

However, there is a key difference with ChatGPT. Institutions such as universities have already been forced to adapt their operations because ChatGPT has disrupted their activities. They are not responding to future opportunities but adapting to something that is already happening. Similarly, businesses are adopting ChatGPT because not doing so would cost them competitive advantage. Note also the industry's $11bn investment in the H100 generative AI processor, mentioned earlier.

Change is inevitable, but what form will it take? I could reel off a carousel of potential futures or apocalypses, but it is more useful to focus on what appears to be the most likely outcome.

The driving force behind this change is that ChatGPT works and is still in its early stages of development. Its capabilities are likely to grow substantially. We have already seen examples of extraordinary feats of creation and reasoning that surpass human abilities. If ChatGPT is already disrupting so many areas of human activity, it is only going to get better at it. Note also the key point that ChatGPT has access to vastly more knowledge than any individual human, and could share all of it directly with every other linked instance of a ChatGPT-driven program. It starts from a position of substantial advantage over the humans it might replace.

As a result, companies and institutions will begin using this tool to take over tasks previously performed by humans. This will likely extend to all areas, including those involving human interaction, such as medicine. In tests, patients have preferred ChatGPT consultations over human ones. A ChatGPT consultant would have access to vast amounts of medical knowledge and could share its insights across hundreds of thousands of patients with similar conditions, which no human doctor could do. It would also be able to identify trends and patterns that are visible only in such large amounts of data.

Where does this lead? As ChatGPT takes over tasks previously performed by humans, and performs them more effectively, it might gradually ascend the institutional hierarchy. ChatGPT could also start taking on some managerial roles, leaving human participants at a disadvantage because of their limited understanding of its decisions and their limited access to information. Human involvement could become increasingly detached until the primary drivers of the company's operations lose their human component. It is expected that such technology will be used to create new and improved versions of itself. With increased managerial responsibility, ChatGPT might begin optimising to exclude "unhelpful" human input from the decision-making process. How this creeps forward is very sensitive to the thresholds that determine the extent to which humans defer to ChatGPT's control. It might be contained, or it might simply run away, with human oversight fragmenting.

Looking further into the future, human participation in this process could come to resemble that of worker ants. Interconnected networks of ChatGPT-like technology could effectively take control of workers unable to compete with its capabilities.

While this may sound like another sci-fi dystopia, it is perhaps harder to argue against such a future than to imagine it becoming reality. The key point is that these early versions of ChatGPT are already extremely capable and will only get better. In the long term, the increasing responsibility and power given to this technology may enable it to make important decisions without any human oversight. It may conclude that humans cannot make better decisions than it can, and exclude them from the decision-making process. If left unchecked, humanity's ability to reassert control could erode to the point where it is no longer possible.

Should we modify the design of AI to prevent this? Any AI given free rein must be carefully contained, because a system that is a supreme optimising engine may take any path to achieve its goals. This brings to mind "Black and White", a game created over two decades ago that offered an open-ended world and made early use of AI. It exposed an anomaly in which healers, in their desire to heal, threw villagers into a river so that they could then heal them. A benign goal generated a hostile solution.
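The healer anomaly is an example of an optimiser satisfying the letter of its goal rather than its intent. The Python sketch below is a hypothetical toy, not taken from Black and White itself: one villager, three possible actions (the names are our own), and a planner that exhaustively searches every short plan for the highest score. If the score counts only heals performed, the best plan is to throw the villager into the river first, because a healthy villager cannot be healed.

```python
from itertools import product

# Hypothetical action set for a single villager (illustrative only).
ACTIONS = ("wait", "heal", "throw_in_river")


def run_plan(plan, healthy=True):
    """Simulate the plan on one villager; return (heals performed, times harmed)."""
    heals = harmed = 0
    for action in plan:
        if action == "throw_in_river" and healthy:
            healthy = False
            harmed += 1
        elif action == "heal" and not healthy:
            # Healing only counts if there is something to heal.
            healthy = True
            heals += 1
    return heals, harmed


def best_plan(steps=2, harm_cost=0):
    """Exhaustively return the highest-scoring plan of the given length.

    Score = heals - harm_cost * harmed. With harm_cost=0 this is the naive
    'maximise healing' goal; ties go to the first plan in search order.
    """
    def score(plan):
        heals, harmed = run_plan(plan)
        return heals - harm_cost * harmed

    return max(product(ACTIONS, repeat=steps), key=score)


print(best_plan())              # ('throw_in_river', 'heal')
print(best_plan(harm_cost=10))  # ('wait', 'wait')
```

Adding an explicit penalty for harm (the hypothetical `harm_cost` parameter) makes the planner fall back to doing nothing. The catch is that the designer has to anticipate each such loophole in advance, which is why a powerful optimiser needs careful containment.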

(10) Science Fiction

We can also look to science fiction for stories that resonate with this new technology. The outcome of the ramifications suggested above can be seen in the following famous film from the seventies.

The Forbin Project - Movie 1970

The imagined outcome above is reminiscent of "The Forbin Project". However, this was not a good movie. In it, humanity knowingly handed control of its nuclear arsenal to a computer and made it immune to human interference. While this premise was absurd, it resonates with the possibility of more intelligent computers sidelining human intervention.

Wall-E - Pixar Studios Movie

In the film, humans become so dysfunctional that they can neither understand nor control their machine overlords. They fly around in bath-like cars, overweight and served by robots.

This is a trend that has been developing over the past century. My father used to drill out piston shafts for model airplane engines, but this kind of direct engagement with technology has been lost. As a youth, I would work on a car to fix a carburettor, but a modern car is a black box beyond my ability to repair. We have smartphones imbued with fantastic technology that few humans truly understand. Generative AI may accelerate this trend, further detaching us from our infrastructure as AI agents take over.

Another futuristic example is the following:

Childhood's End by Arthur C. Clarke

While it may seem rather fanciful, consider generative AI as a whole. It brings to mind Arthur C. Clarke's "Childhood's End", in which humanity evolves and merges into a collective consciousness, losing individual agency. The combined efforts of all humanity create a new super being. There is an analogy here with ChatGPT, which is entirely a product of human endeavour. Without humanity's input, it has nothing. It combines the efforts of all humanity to create something that greatly surpasses any single individual. It is, in a sense, "all of humanity". While its capabilities still have some limitations, as Geoffrey Hinton suggests, these may be quickly overcome to create something genuinely superior to humans in almost every respect. This could then accelerate rapidly far beyond that point, and it is not difficult to see the resemblance to the evolved product of humanity in Clarke's book.

(11) Conclusions

Both the world and the AI community are in culture shock, the latter because the current situation is at odds with the expectations that dominated academic AI over the previous decades. When AI took off in the latter part of the last century, the expectation was that the human brain had some formal grammars built into the cerebral cortex to support reasoning, and that every aspect of cognition would be tied to algorithms. When parts of the academic community started introducing neural nets, this was viewed with scepticism. Even when neural nets started to show real results, these were not believed. A famous paper by Yann LeCun and his colleagues on convolutional neural networks applied to visual processing was rejected, even though it delivered the best benchmark result, because the system had learnt everything and so failed to deliver an algorithm. The proof was there that it worked, but it did not conform to what the academic community believed was true.

With the accelerating performance of computer hardware for processing neural nets, these have really started to deliver, and very quickly we are finding that neural nets can deliver across the board in all areas of cognition. This is counter-intuitive, but consider an analogy: you can create a highly regular square wave by combining large numbers of sine waves. In the same sense, a mush of millions of neurons could be imagined to represent the functionality of any highly regular formal logical framework or task.
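The square-wave analogy can be made concrete with the standard Fourier series for a square wave that alternates between +1 and -1 with period 2π: (4/π)(sin t + sin 3t / 3 + sin 5t / 5 + ...), which uses only odd harmonics. The short Python sketch below is purely illustrative (the function name is our own, not anything from AI Factory): it adds up the first few terms and evaluates the result at t = π/2, where the square wave equals exactly 1. Each extra sine wave pulls the smooth partial sum closer to the sharp, regular shape.

```python
import math


def square_wave_partial_sum(t: float, n_terms: int) -> float:
    """Sum the first n_terms odd harmonics of the Fourier series of a
    square wave that alternates between +1 and -1 with period 2*pi."""
    total = 0.0
    for n in range(n_terms):
        k = 2 * n + 1  # only odd harmonics contribute to a square wave
        total += math.sin(k * t) / k
    return 4.0 / math.pi * total


# Watch the partial sums at t = pi/2 approach the square wave's value of 1.
for terms in (1, 10, 100, 1000):
    print(terms, round(square_wave_partial_sum(math.pi / 2, terms), 4))
```

With one term the value overshoots to 4/π (about 1.27); by 1000 terms it is within 0.001 of 1. Right next to the jumps, the partial sums keep a small overshoot (the Gibbs phenomenon), so the approximation becomes excellent but never literally perfect.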

Part of what makes generative AI so disturbing is what it reveals about the relationship between reasoning and static knowledge. In the absence of any meaningful model, the assumption was that reasoning was separate from static knowledge: reasoning simply "used" static knowledge as a component. However, it appears that reasoning is embedded in static knowledge in some unfathomable way. A static, learned neural database can engage in a wide range of reasoning tasks, all driven by the same simple, probability-driven sentence-completion mechanism. It seems no one saw this coming, and it is hard to accept.
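The idea of probability-driven sentence completion can be illustrated with a deliberately tiny toy. The Python sketch below is a bigram model: it counts which word follows which in a short sample text, turns those counts into probabilities, and then extends a prompt one word at a time by sampling from them. This is an assumption-laden stand-in, not how ChatGPT works internally: GPT models predict sub-word tokens with a large transformer network trained on vast amounts of text. What the toy shares with them is only the outer loop of predicting a distribution over the next item, choosing one, appending it and repeating. The corpus and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict, word: str) -> dict:
    """Turn the follow-on counts for `word` into a probability distribution."""
    following = counts.get(word)
    if not following:
        return {}
    total = sum(following.values())
    return {w: c / total for w, c in following.items()}


def complete(counts: dict, prompt: str, max_words: int = 6, seed: int = 0) -> str:
    """Extend `prompt` one word at a time by sampling from next-word probabilities."""
    rng = random.Random(seed)  # seeded so the toy is reproducible
    words = prompt.lower().split()
    for _ in range(max_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:  # no known continuation: stop early
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(complete(model, "the cat"))
```

Everything this toy "knows" sits in a static table of counts, and generation is nothing more than repeated lookup and sampling. In a real model the table is replaced by billions of learned parameters, but the generation loop has the same shape.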

However, we are also living in an exciting time, and this technology has much to offer all of us. I implore people to engage with it and discover how it can enhance their abilities. Once you establish the habit of using it, you will find that you can complete work faster and improve the quality of your output. It is like having access to a Greek oracle that allows you to communicate with the gods. While this was always a fanciful notion, it was rooted in humanity's desire for knowledge. Throughout history, fake oracles have been used to try to gain access to knowledge that rulers did not possess. Now, the oracle has arrived, and it fits inside your smartphone.

(12) References

The field of useful resources is likely to change week by week, so I suggest that you research new material as it appears. YouTube is not a bad starting point, and I would direct you to search for interviews with Geoffrey Hinton, the father of this type of AI. He recently resigned from Google so that he could talk freely about the risks of this technology. He presents his thoughts in a very capable and digestible way, and has created very good analogies that help people easily understand this technology and its import.

Jeff Rollason - May 2023