Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

An issue when dealing with thousands of millions of test instances of randomness is that the perception of randomness can be distorted by inevitable freak non-random looking patterns. This is more than something of casual interest but actually an issue that requires targeted engineering. This topic has already been touched on in our earlier articles Digging further into the Statistical Abyss and Backgammon Programs Cheat: Urban Myth??.

This article looks at how we have addressed the issue with some simple engineering ideas.

The Broad Problem

This is not just a topic of marginal curiosity but has consumed a huge volume of popular dissent, discussion and a fair bit of wrath. A notable recipient of such attention has been Apple with their iPod shuffle. In its original implementation the iPod did exactly what it claimed to: it randomly chose a song to play after each song finished. However, with maybe a few hundred songs on the device inevitably it is not long before you hear the same song twice in succession. Beyond just simply complaining about this, customers saw this as evidence of a conspiracy to push particular artists or songs by Apple (see CNET and BBC).

Apple's response to this problem was perhaps overly comprehensive. Now, rather than randomly choose a song on the fly, they have elected to create a randomly ordered list when shuffle is selected, rather like shuffling a deck of cards, predetermining the play order from then on. Songs are never played twice before all songs have been played...and users now complain about that.

AI Factory and Music

Music here is just a subset of this problem, but at AI Factory our solution to this specific instance has been a bridge between completely random and forced pre-order. This simply created a list of songs and as soon as a song was played it was pushed to the end of the list. If the list had "N" songs, then random choices were made from the first "N-X" songs, where X might be 5. In that case it was impossible for a song to be played again before 5 other songs had been played first.

Music is not our area, but if it was we would be re-using such a system, plus an option to mark songs as "Favourites" that got played more often and "less liked" that were played less often.

However our main drift here is not about music, as follows:

AI Factory and Random Dice and Deals

Our primary grief is the endless repeated claims that our games cheat and, in particular, our backgammon program dice rolls are not random and Spades and Hearts do not have random deals. This issue is already covered in previous articles, but there is no reasonable fix. We could apply the system used for music above, such that each of the double dice rolls would be marked like songs so that they did not immediately repeat. 2x double 6's in a row would be impossible. We could also detect "lucky" rolls in the same way so that the AI never had 2 or 3 lucky rolls in succession, but this is cheating and unnatural and would invite obviously justifiable valid criticism. We instead have to simply take it on the chin and try and educate our users why this appears to be happening.

AI Factory and Advertising

Happily this is an area where there is a serious problem but also a good fix. AI Factory serves up some 15 million adverts per day plus a comparable number of internal cross-promotion ads. The issue is very similar. We do not always show an ad, but where we do this randomly, we risk that ads may appear clustered. For example our cross-promotion ads typically have a variable chance of display of 20%, so that only about one in 5 times will users see an ad. However the chance of seeing 5 ads in succession is one in 3125. So with maybe hundreds of thousands of ads displayed, this is going to happen very often. It did happen and people complained about being saturated with ads.

Our Solution: De-Clustering

Our solution is simple: we track the recent history of ads displayed and weight each of these. The most recent is weighted 128, the one before 64 and one before that 32 and so on. This is then combined with the expected probability to skew the net probability to be lower if there is a previous recent history of ads being displayed. The exact formula for this is hard to describe, but the code is shown at the end of this article. "Log_Event" is used to create the bitmap of the recent ad history and "StopByCluster" to decide whether an ad should be displayed or not.

The impact of this is best conveyed by plotting the ad profile before and after de-clustering, as follows:

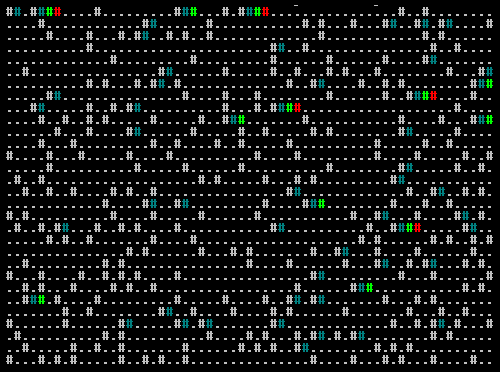

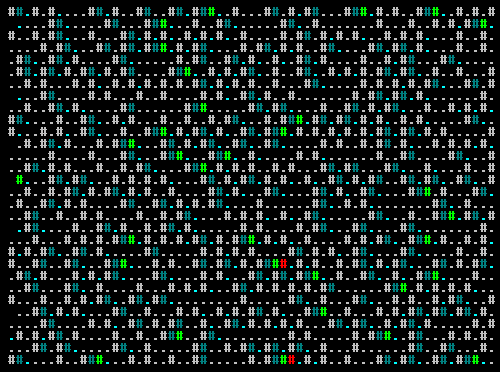

Before De-Clustering, 20% probability of event.

The above shows events as '#' and no event as '.', with events occurring randomly 20% of the time. Events shown in dark cyan are events that follow one other event (so 2 events), bright green after 3 successive events, bright red after 4 and backlit bright red after 5+ events (not shown in the above sample).

It can be seen that double events are quite frequent and that there are 12 triple events and 5 quad events, after less than a thousand event opportunities in total.

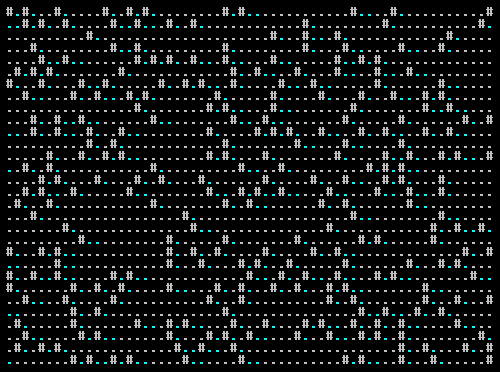

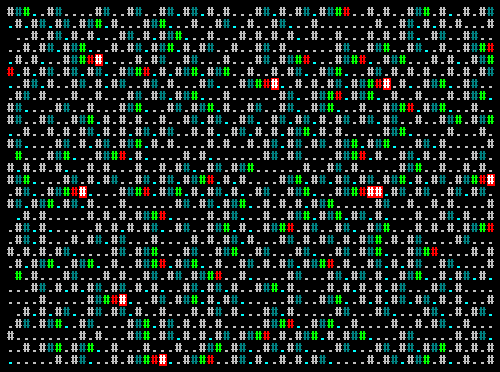

If we look at the exact same random profile but with de-clustering code (as shown in the sample at the end of the article), the display profile changes as follows:

After De-Clustering, 20% input probability of event. Net effective probability 15%

This marks a cluster intervention by a cyan ".". From this there are no simultaneous events. However the overall probability has reduced from 20% to 15%. If we increase the base probability to 25% below.

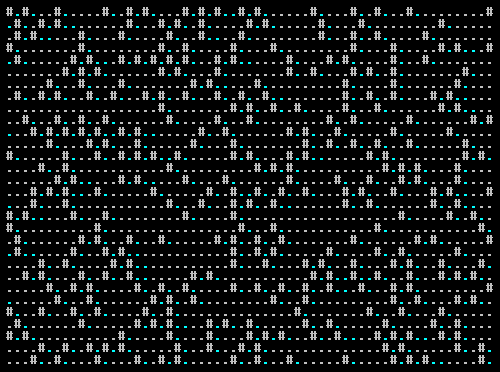

After De-Clustering, 25% input probability of event. Net effective probability 20%

Now we have brought up the target probability to the intended 20% but have maintained no paired events. For an ad distribution this is very satisfactory, with no annoying clustering of ads.

Of course these are not "stressed" examples. With a probability of 20% it is not so hard to de-cluster as there are plenty of empty slots that can be occupied.

If we instead push up the target probability to 50% then clustering becomes a problem, as follows:

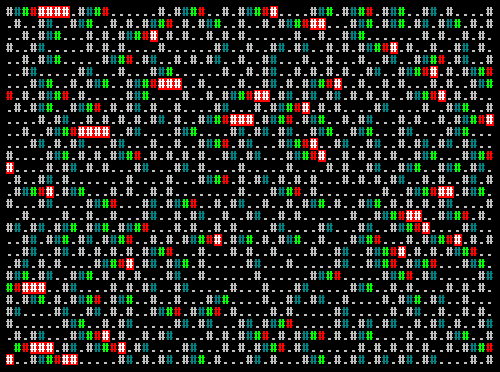

Before De-Clustering, 50% probability of event.

Here the distribution has broken down and we see a significant body of 5+ clusters. One of the above is even 8 long. If we apply the de-clustering to this we have:

After De-Clustering, 50% input probability of event. Net effective probability 41%

This is very obviously a big improvement with still many pairs and a fair number of triples but only two quad sequences. Of course the net probability has dropped to 41%. If we increase the input probability to restore the net 50% we have:

After De-Clustering, 58% input probability of event. Net effective probability 50%

This clearly has some significant impact and now we have 8 events of 5 in succession and 1 of 6, but this is a big improvement over the random distribution which had 29 events of 5 and 19 of 6+.

Conclusion

Net the new de-clustered profile is now no longer random, but users will perceive this as random. We control our cross-promotion ads using this code. The consequence is less annoyed customers, but they are still seeing the same number of ads. Note though that users that do not click on ads will gradually see such ads fall away. If the user never clicks on an ad then there is no point annoying them and have them then write a bad review.

The actual code for this is as follows:

Jeff Rollason - February 2015