Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

This article follows on from Evaluation options - Overview of methods from an earlier newsletter, which offers a recipe for successful AI evaluation development. That recipe highlighted what might go wrong with basic strategies, but here we look further into the logistics of actually delivering what you need.

As you progress, and the test framework becomes more capable and sophisticated, it is easy to forget that sometimes some older methods offered something that you are now missing.

It does not matter how long you have been doing this stuff; you may gradually get stronger, but the tightrope is still very narrow and it is still easy to fall. This article reflects on some further examples in the practicalities of AI development and shows how that, even with added experience, it is not hard to go astray.

Aspirations of Experience

Older projects can sometimes hit on powerful and effective solutions, naturally avoiding problems that without that framework it would be hard to anticipate. The default hope is that with gradual accumulated experience that all lessons are retained and that things can only get better. This notion depends on the premise that one understands the import of what one has done and therefore appreciates why it did or did not work.

The specific example in mind here is the successful development of "Shotest", which played Shogi (Japanese Chess) and successfully competed in the World Championship, twice ranked third in the world and a whisker way from actually becoming the World Champion. This was at its peak 1997-2002 and here is compared with more recent AI development carried out in other games playing domains. See Developing Competition-Level Games Intelligence.

This development was a good success, but what did it offer?

Shotest Shogi - Japanese Chess

I took on this project back in 1996 having not even heard of the game, but with some substantial experience in western computer Chess. My ideas in computer Chess had not made many waves, but were unusual and I had taken games from the existing World Champion program "Belle". As it happens the ideas developed for Chess were better suited to Shogi than Chess, so I started from a good place.

Development Constraints

| (1) | Available hardware | |

| Back in 1997 PCs were expensive and, unless you were part of some large enterprise, you needed to be able to test results on modest hardware. In this case I had a Pentium Pro PC running at 200MHz, and limited access to some other PCs running at between 90MHz to 120MHz. Consider that this development was aimed at providing a program that could compete in a competition where a game may take 60 minutes in total, then testability with such limited hardware was an issue. | ||

| (2) | Background in Shogi | |

| As indicated before, I had not even heard of this game and did not know anyone that did. I could attend the infrequent Shogi club at the Japanese embassy, but this is relatively rarified exposure and does not substitute for a lifetime already playing the game. | ||

| (3) | Good background in Computer Chess | |

| This is a great help and benefited from previous development experience developing Chess programs in Fortran and assembler. Much of that early experience was built on hand-written notebooks. | ||

A plan for assessing test results

With limited hardware any idea of assessing results from just win/loss rates becomes unreachable and fairly absurd. An overnight run on one PC playing 2 versions against each other might deliver some 10 games, which might offer a 6:4 win, which would be meaningless. Even 10 nights work would only give 100 games and still this would contribute little if you were trying to detect an improvement of a few percent. Testing on win/loss is like having 2 types of the same sized spheres and calculating which is heavier by placing a large number of these in a jar and agitating to see which balls biases to the bottom of the jar. That gets the result but is a hammer cracking a nut and simply not possible if you only have 2 spheres. What I needed metaphorically was something that could achieve the same result with just 2 balls.

Squeezing results from very little data

In the testing cycle I could easily record games. These yielded win/loss results but also provided other inputs to some generic analysis. If you accumulate a number of games, you can compare them and determine where they differ and lead to different results. This becomes significant if you have a version A that randomly plays many games following a particular game variation. If that variation generally leads to a win then having a new version gain wins following this variation is not necessarily significant. You already expect to win in this variation. What you need is to detect where version B finds an improvement over version A, not just a me-to win.

ANALGAME.EXE for analysing results

With games stored as text files it became easy to read in blocks of test results and compare them. This provided a means of meaningfully comparing sets of results and also providing a tool that at a glance gave a visual feel to how the games had progressed.

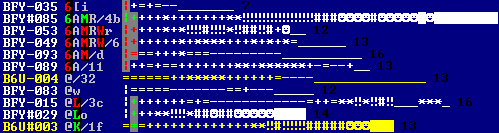

In the example crop below we are comparing how two versions of the same program, BFY and B6U, fared when playing against the same AI opponent.

The above has been collapsed to hide some information, but the left-hand column is the filename, which logs a win with a '#' in the filename and '-' for a loss. The next column, with entries such as '@L/3c' are a tokenised version of the game record (where /3c is an expanded token name after one character names are exhausted), so that the entire game there is reduced to just three game segments/tokens. This allows a quick way to visually compare similar adjacent games, reduced to the minimum possible token classification needed to distinguish the games. Where a token is marked in green it indicates that the program varied at that point to reach a win. If red, then it varied to find a loss.

In the final right-hand column is a string of tokens which reduces the game record to win/loss score sequence, so that a solid white block represents a very high positive game score (basically a win), a '#' is a strong positive score, '*' less positive and '-' losing and '_' more losing. This gives an instant impression of how the game progressed. BFY-053 above shows a game where it thought it was winning, but the game collapsed to a loss. BFY#029 shows a rapid progress to a win, whereas B6U#003 (from the other program version) also shows a win but it took much longer.

The on-line app allowed games to be stepped through within the app, so that you could examine the games and board position and get an instant view of how one game was different to another. This simple format allowed easy spotting of games that needed attention. A glance at the screen will instantly reveal a game where a winning position was lost and is therefore a candidate for analysis. Visibility is everything.

This structure offers the possibility of scoring how good some deviation actually was. At the bottom the game B6U#003 is a win, deviating from the BFY games, but finding a win where BFY already found a win. The B6U win was actually slower, but BFY also found a loss while following the same basic variation. So B6U has net appeared to do quite well so is awarded a value for this improvement. Note that if B6U had achieved a win in the variation around BFY-089 above it would have scored even higher as BFY is losing 4 games in this variation to only one win.

Using this data for assessing results

The results above can provide a sum of values for all wins for BFY and B6U. This is a better approximation of the relative merits of these two programs than just the win rate, as it is assessing the actual value of each win. Where one version scored wins where it did no better than the other version, the value of the wins is reduced to almost no value.

Note that the calibration of this rating system is very ad hoc. It has no mathematical basis but simply assigns intuitively "reasonable" values.

Expanding the values of these results

However this opened up another interesting possibility. We have compared BFY and B6U, but these are still vulnerable to reduced statistical significance. What we can do is also compare BFY with another BFG set of games and also B6U with BFG. This provides 3 sets of results. This can be combined into a single rating file, as follows

BFY 5.3 B6U 14.0 B6U 2.7 BFG 10.1 BFY 26.2 BFG 1.4

From above B6U beat BFY by a margin of 14.0 points to 5.3, but BFG beats B6U. Finally BFY completely beats BFG. These results can be passed to the ELO-style program RATING.EXE to analyse these results, as follows:

1. BFY 2139 (31.5 pts) *********************************************** 2. B6U 2118 (16.7 pts) ********************************************* 3. BFG 1745 (11.5 pts) ******************

From this, BFY is rated higher than B6U even though head-to-head it does not do so well.

A single set of games can be thereby compared with many other sets of games and some sets can even be merged into single sets for re-comparison. This allows just a few new games to deliver a great deal of comparative information.

Exploiting this further

As a side effect of the fact that the world championship games were played across serial cables, the program needed to be able to play games across the serial interface, so that versions of programs could be made distinct binaries playing each other, rather than setting up some internal autoplay. Binaries could be created and stored. Since these were locked they could be recovered for re-testing as needed. Evaluation variations could be embedded in distinct uncorruptable binaries.

The next step is to measure the value of specific evaluation components. In the modern fireball testbed framework we have now, evaluation components are generally quickly and easily switched on and off on-line, but this can be very complex and risks that adding some new switch will potentially corrupt some other term.

Without any on-line selection of evaluation switches, this was instead controlled by hundreds of compilation switches such as these below.

#define Nor 1 // 1 knights ORed during blocks (was Kbl=5) #define Qav 0 // 1 quad uses mean of all quad in evaluation #define Pw0 3 // (fixed) 3 1/2/3/4 pawn worth nothing if opp pawns in hand #define KcF 1 // 1 king attacked by target bug fix #define Hsc 2 // 2 history scaling #define Tmv 0 // revert capt of tied pc if tied target capt/move

This made very efficient binaries (no redundant code) and offered another opportunity, to evaluate individual terms against many game sets. The version number of the program could be linked to a change in a single term and the impact of that term measured against many sets of games. To manage this, another program analyses the source files to determine which switches are active and which are not.

This now allows rating of evaluation terms, rather than program versions.

Why do this?

A problem with any new evaluation term, which might even just be a bug fix, is that it may appear to make the program weaker where commonsense might expect it to make it stronger. This allows evaluation terms to be left disabled and re-enabled and re-tested later. At some point the term may well result in better play.

This is all managed by a program that automatically analyses the switch files (of name BFG.h etc) so that it can detect which program versions have which switches and can even recommend what switches may be worth testing.

Book creation

This same system can also be used to create opening books. The ANALGAME.EXE program has a command that allows a game to be exported if it is to be included in a book. Which lines are to be added can be obvious from the display output. These can be re-compiled into older program versions and the testing cycle repeated.

So older versions could be improved and re-cycled for re-testing by simply narrowing the positions reached to games where the program performed well.

How good was this?

This unorthodox procedure had one great advantage in that it was reliable and self driving. It automated result testing so that assessments of terms and version easily and reliably dropped out.

This required few decisions on the part of the developer as the analysis table invited the user to test combinations of switches and provided an automatic cross-reference of the result against all previous results. Any new test set, even if quite small, offered a meaningful comparison with all existing test data. This was very sensitive.

How does this compare to our modern Fireball setup?

Firstly the setup above yielded results: the program performed well in the World Championship having few poor games and winning enough to come close to winning. It beat 3 World Champions in the year that they were World Champion and one other a few years after it was World Champion. It reliably performed in all parts of the game, showing fewer weaknesses than most of its opponents.

So the results were good but the automation of testing was also very good. In our modern flexible framework we can instantly test any feature we like but we do not have the above driving mechanism to allow automated comparisons of data sets. Until you get embroiled with this it is hard to anticipate just how much of your time is actually spent just manually comparing sets of results and choosing what to test. We do not have this automation, driven by game analysis.

The new framework does not have a serial or otherwise equivalent system to allow different versions to play each other. With this added it should be easier to reliably compare with older versions.

Instead we do have enormously powerful tracing and switching facilities that offer huge advantages, and were a massive step forward, but lack this automated capability.

An Achilles heel in the new system

The new system of switching terms on-line offers immediate flexibility but also hides a potential dangerous fault that simply could not occur in the Shotest Shogi development above. If all evaluation terms are embedded in the same source file and activated and disabled using on-line switches, there is a risk of accidentally corrupting the source through some bad edit. In the on-line framework this error may not be apparent as all test versions may be equally weakened by this corrupting error. In the Shotest framework such an error is not possible as the previous uncorrupted version will still exist and will beat the new corrupted version.

There is an analogue for this property that occurred in mathematics. The infamous example is that of William Shanks in 1873 who reputedly calculated 707 digits of Pi, but made an error on digit 507, after which all following digits were worthless. This error was not discovered until 1940. In that case he had no means of detecting his error so progressed with the futile exercise of calculating an additional 200 incorrect digits.

Making this type of invisible error can be catastrophic and very hard to spot. You could progress well past this development point and still fail to spot the defect.

Avoiding this problem

Although tedious, it is necessary to routinely test a newly modified version against a known test set, with the new feature disabled, to ensure that none of the previous code has been accidentally corrupted. This is most easily done using a checksum approach by comparing a net final value that is a checksum of the entire analysis.

This solves the problem, but still needs user intervention. In some special circumstances such a check may not be obvious to synthesise, so there is always a risk of procedural failure. Again human frailty comes in. The procedure is tedious and you might convince yourself that it is not needed. Without an automatic compulsion such a test can be easily overlooked or unwisely skipped to speed up development.

It is that narrow high wire that you can kid yourself you will never fall off.

Conclusion

The old Shotest system was heavily constrained to limited test equipment and compelled development of serial-based testing. This was meshed with a test procedure that created a system that automatically drove the development with little need for development administration. At the time there were no other obvious ways to proceed but the framework was better than I had imagined. Unless you lose this automated system, it is hard to anticipate just how valuable it was. Of course this old system does not have the extraordinary on-line switching and tracing capability of the new system, which is indispensable, but the automation was very effective.

The system of testing terms that were then disabled and re-tested later also worked very well. However the need for this is not intuitively obvious. It may seem reasonable that a term be added and tested and then discarded if it fails. The system above allowed a multitude of terms to be suspended and then recalled later. The automation made it easier to spot these candidates and then easy to re-test them. Any new test could be cross-referenced with countless existing game records and a reliable measure of its value obtained.

This is now ancient history and at the time the success of the project surprised me and most people at the World Championships, including our publisher. Having followed this single path it was not really obvious why we succeeded so well but subsequent time spent in a different framework has exposed the value of this system and made us understand more about what works in evaluation development and why that system so capably delivered such a strong program.

Jeff Rollason - September 2013