Computers play games using many techniques, but one that is used frequently is hill climbing. The premise is that a game may not be solvable by evaluating a single move (as is possible for games such as Tic-tac-toe), so the evaluation has to assess intermediate states, short of a win, that will eventually lead the player to a win. With each move the game engine therefore aims to reach the position nearest to a win (solving part of the problem). Having got there, it repeats the same process, selecting moves until it reaches the win state.

In evaluation terms, if a win equates to 10 points, where you start with zero points, then the game engine will progressively make moves that gradually change the value of the position from 0 to 10.

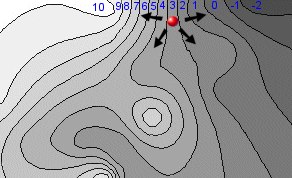

This process can be represented by an analogy of a hill-walker looking for the summit. In the diagram below the hill-walker can choose to move in multiple directions, seeking the summit (10).

Here we can assume that the hill-walker cannot see very far, so can only assess the gradient immediately around his position. He can choose from a selection of directions, and chooses to walk to the left, which offers the most elevated new position, in this case from "3" to "4". The hill-walker moves and repeats the process and, in a few moves, will clearly reach the summit "10" on the map.

The hill here represents the evaluation function for the program which returns a value that tells it how close it is to a win.
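The hill-walker's procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the height map `HILL`, the four-direction `neighbours` helper and the `hill_climb` function are hypothetical names invented here, and the grid stands in for an evaluation function where 10 is the win.

```python
from typing import Iterator, List, Tuple

Pos = Tuple[int, int]

# Hypothetical height map standing in for an evaluation function; 10 is the win.
HILL: List[List[int]] = [
    [1, 2, 3, 2],
    [2, 4, 5, 3],
    [3, 5, 10, 4],
    [2, 3, 4, 3],
]


def height(grid: List[List[int]], pos: Pos) -> int:
    """Evaluation of a position: how high up the hill it is."""
    return grid[pos[0]][pos[1]]


def neighbours(grid: List[List[int]], pos: Pos) -> Iterator[Pos]:
    """Positions one step up, down, left or right that are still on the map."""
    r, c = pos
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]):
            yield (nr, nc)


def hill_climb(grid: List[List[int]], start: Pos) -> List[Pos]:
    """Keep stepping to the highest neighbour until no neighbour is higher."""
    path = [start]
    while True:
        here = path[-1]
        best = max(neighbours(grid, here), key=lambda p: height(grid, p))
        if height(grid, best) <= height(grid, here):
            return path  # nowhere higher to go: a summit (possibly a false one)
        path.append(best)


path = hill_climb(HILL, (0, 0))
print([height(HILL, p) for p in path])  # [1, 2, 4, 5, 10]
```

Each step looks only at the immediate surroundings, which is exactly the short-sighted walker described above.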

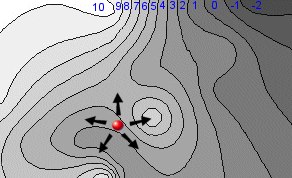

In an ideal world, with an ideal evaluation function, the hill would be very simple, offering a single summit with a uniform continuous gradient (a huge cone, in fact). Given this, the program could easily reach the summit (win). However, in the diagram above this is clearly not the case:

If the hill-walker starts from the different position shown above, he will clearly reach a lower local maximum, a "false summit". From there he cannot reach the final summit.

This is the first of a number of possible problems. In this instance the hill-walker can overcome the problem by looking further ahead. In evaluation terms this means performing a tree search, so that a sequence of predicted moves allows the hill-walker to find the real, more distant summit. In this case a deeper search (predicting many successive moves) could solve the problem.
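A minimal sketch of the false summit, and of how looking further ahead escapes it, is given here. It assumes a one-dimensional hill (`HEIGHTS`) and a hypothetical `climb` function whose `depth` parameter is how many steps ahead the walker can see. It is a stand-in for a real game tree search, not an implementation of one.

```python
from typing import List

# Hypothetical 1-D hill: a false summit (4) at index 2, the real summit (10) at index 7.
HEIGHTS: List[int] = [0, 2, 4, 3, 2, 5, 8, 10]


def climb(heights: List[int], start: int, depth: int) -> int:
    """Return the index where a walker who can see `depth` steps ahead stops."""
    pos = start
    while True:
        lo = max(0, pos - depth)
        hi = min(len(heights) - 1, pos + depth)
        # Highest point within `depth` steps; ties go to the nearest one.
        target = max(range(lo, hi + 1),
                     key=lambda i: (heights[i], -abs(i - pos)))
        if heights[target] <= heights[pos]:
            return pos  # nothing higher is visible from here
        # Commit to the whole sequence of steps that leads to the target.
        pos = target


print(climb(HEIGHTS, 0, depth=1))  # 2: stuck on the false summit
print(climb(HEIGHTS, 0, depth=3))  # 7: sees across the dip to the real summit
```

With `depth=2` the walker is still stuck at index 2, because the dip is three steps wide. How deep a search needs to be depends entirely on the shape of the hill.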

However the situation above still implies a relatively regular and reliable evaluation gradient. This is not generally the case:

Evaluation potholes

The nice smooth evaluation gradient above assumes some well-behaved evaluation that delivers a smooth analogue value. In practice evaluations are usually a combination of terms that are simply added together. If each term is independent, then this can be managed, but as often as not they are linked. A typical problem arises when assessing latent and actual features. The following chess position is such an example:

In this position an evaluation feature might be a credit for a rook being able to occupy an open file. The rook at e8 has the option of occupying d8, so gains this credit. However if the rook actually moves to d8, it will lose this credit and instead get evaluation credit for attacking the squares d5, d6 and d7 and the pawn on d4. In this case a credit of (say) 8 points for the ability to occupy the open file may be replaced by 30 points for actually occupying the file. This would then not cause a problem, as the program could progress from opportunity to occupying the file by simply climbing the evaluation gradient.

However, this might not be so. The actual value of occupying the file might be less than expected, so that the opportunity to occupy the file is valued more highly than actually occupying it. This is an evaluation pothole, and may make the program stop progressing, as it prefers the opportunity to occupy the file to actually occupying it. In consequence, a program with a rook already on the file may well move it off the file, simply to regain the opportunity to occupy it.
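The arithmetic of the pothole can be shown with a small sketch. The 8 and 30 point credits come from the example above; the 5 point credit in `POTHOLE`, and the names `rook_file_score`, `HEALTHY` and `POTHOLE`, are assumptions made up for illustration.

```python
from typing import Dict

# Credits from the example above: 8 for the option, 30 for actually occupying.
HEALTHY: Dict[str, int] = {"can_occupy_open_file": 8, "occupies_open_file": 30}
# Hypothetical mis-tuned credits: occupying is worth less than the option.
POTHOLE: Dict[str, int] = {"can_occupy_open_file": 8, "occupies_open_file": 5}


def rook_file_score(on_open_file: bool, can_occupy: bool,
                    weights: Dict[str, int]) -> int:
    """Credit for one rook: the actual feature replaces the latent one."""
    if on_open_file:
        return weights["occupies_open_file"]
    if can_occupy:
        return weights["can_occupy_open_file"]
    return 0


for name, weights in (("healthy", HEALTHY), ("pothole", POTHOLE)):
    before = rook_file_score(False, True, weights)   # rook on e8, d8 available
    after = rook_file_score(True, False, weights)    # rook has moved to d8
    print(f"{name}: {before} -> {after} (net {after - before:+d})")
```

With the healthy weights the move to d8 climbs the gradient (net +22). With the mis-tuned weights the same move scores net -3, so a hill climber would rather keep the option than take it.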

This is a simple case, but sometimes these potholes can be very deep! The situation is also quite common. The symptoms are hard to read, as the program may still progress, but may be confused as the tree search endlessly experiments with trading feature opportunities for the actual feature. This may make the program dither, or simply slow down the tree search.

Fixing the potholes

The example above was quite simple, but this gradient failure may be the product of multiple combined terms. You may be trading latent and actual threats, but you may also be simply trading different features. The only way to address this is to test your program, by displaying the net value of moves from given positions. This can be run for a whole game.

The programmer then needs to carefully examine this list of moves and the net change in value to detect anomalies. You may find that a move, which is obviously beneficial, actually has a negative net score. Once found, you need to display all the evaluation terms used to derive this to detect why the move is apparently bad and correct the anomaly.
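A minimal sketch of such a report follows, assuming the evaluation can return its individual terms as a dictionary before and after each move. The term names, the `GAME` records and the `audit` function are hypothetical; a real program would fill the records from its own evaluation function.

```python
from typing import Dict, List, Tuple

Terms = Dict[str, int]
Record = Tuple[str, Terms, Terms]  # (move, terms before, terms after)


def term_deltas(before: Terms, after: Terms) -> Terms:
    """Change in every evaluation term; a term missing on one side counts as 0."""
    names = sorted(set(before) | set(after))
    return {name: after.get(name, 0) - before.get(name, 0) for name in names}


def audit(records: List[Record]) -> List[Tuple[str, int, Terms]]:
    """For each move, return (move, net change in value, per-term changes)."""
    report = []
    for move, before, after in records:
        deltas = term_deltas(before, after)
        report.append((move, sum(deltas.values()), deltas))
    return report


# Hypothetical records for two moves of a game.
GAME: List[Record] = [
    ("Nf6", {"material": 0, "mobility": 10},
            {"material": 0, "mobility": 14}),
    ("Rd8", {"material": 0, "mobility": 14, "can_occupy_open_file": 8},
            {"material": 0, "mobility": 15, "occupies_open_file": 5}),
]

for move, net, deltas in audit(GAME):
    print(f"{move}: net {net:+d}")
    if net < 0:  # show the term breakdown for any move that loses value
        for term, change in deltas.items():
            if change:
                print(f"    {term}: {change:+d}")
```

The report only lists every move with a negative net score. Deciding which of those moves are "obviously beneficial", and therefore anomalies, is still the programmer's job.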

This requires a lot of patient work, but the benefit is a program that will not seem to habitually get lost or seemingly hesitate. Of course evaluation functions change with tuning, so this is a process you may need to return to many times.

Jumping over potholes

Of course, regardless of the scrutiny you may apply, there will always be potholes, so you need other methods to avoid these.

1. Tree Search

Of course, if you predict many moves in advance, you can find the way in and way out of potholes. However this is not a substitute for fixing them! Potholes create hesitant play and slow up tree search as the search gets confused.

2. Predicting where potholes are

This assumes a tree search, and one that is selective in the moves it examines. Typically the evaluation function not only delivers final evaluations but is also used to guide the search. You should have another secondary means of move selection. This comes under "plausibility analysis", which will be discussed in a later article, and this provides a second opinion of which moves are to be examined. This second opinion is a safeguard, as it will probably not share the same flaw as the evaluation function.
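One possible shape for this second opinion is sketched here. It assumes a selective search that keeps only the top few moves, and merges the evaluation's choices with those of an independent plausibility score. The scores, the move names and the `select_moves` function are all invented for illustration, and this is not a description of any particular plausibility analysis.

```python
from typing import Callable, List


def select_moves(moves: List[str],
                 eval_score: Callable[[str], int],
                 plausibility: Callable[[str], int],
                 keep: int = 2) -> List[str]:
    """Moves to search: the evaluation's top `keep`, plus the second opinion's."""
    by_eval = sorted(moves, key=eval_score, reverse=True)[:keep]
    by_plausibility = sorted(moves, key=plausibility, reverse=True)[:keep]
    selected = list(by_eval)
    for move in by_plausibility:
        if move not in selected:
            selected.append(move)
    return selected


# Hypothetical scores: the evaluation dislikes Rd8 because of a pothole,
# but the independent plausibility score rates it highly.
EVAL = {"Rd8": -3, "Ra8": 2, "h6": 1, "Kf8": 0}
PLAUSIBILITY = {"Rd8": 9, "Ra8": 1, "h6": 2, "Kf8": 0}

selected = select_moves(list(EVAL), EVAL.get, PLAUSIBILITY.get)
print(selected)  # ['Ra8', 'h6', 'Rd8']
```

Selecting by evaluation alone would have dropped Rd8 before the search ever looked at it.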

3. Detecting potholes during search

Evaluation anomalies can reveal themselves in the search. If the search tracks evaluation changes, it can spot when an evaluation apparently dips between moves. The move that caused the dip can then be recorded and flagged as a pothole, so that in future the search will know that the assessment of that move will seem worse than it really is.
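As a rough sketch of this idea, assume the search can hand over each line it examined as a list of (move, evaluation after the move) pairs, all from one side's point of view. The definition of a dip used here (the value drops, then returns to at least where it was on the next move), the keying by move name and the sample `LINES` are simplifying assumptions.

```python
from collections import Counter
from typing import List, Tuple

Line = List[Tuple[str, int]]  # (move, evaluation after the move), one side's view


def find_dips(line: Line) -> List[str]:
    """Moves after which the value dropped, then recovered on the next move."""
    dips = []
    for i in range(1, len(line) - 1):
        before, after, later = line[i - 1][1], line[i][1], line[i + 1][1]
        if after < before <= later:
            dips.append(line[i][0])
    return dips


# Running tally of suspected potholes, kept between searches.
pothole_counts: Counter = Counter()

# Hypothetical lines examined by the search.
LINES: List[Line] = [
    [("start", 8), ("Rd8", 5), ("Rd2", 30)],
    [("start", 8), ("h6", 9), ("Rd8", 6), ("Rd2", 31)],
    [("start", 8), ("Ra8", 4), ("Ra7", 3)],  # a real loss, not a dip
]
for line in LINES:
    pothole_counts.update(find_dips(line))

print(pothole_counts.most_common())  # [('Rd8', 2)]
```

A move that keeps turning up in this tally is a candidate pothole, and a good place to start examining the evaluation terms.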

Conclusion

Constructing evaluation functions is both an art and a science. Getting it right requires experience and good judgement, backed up with good engineering practice. A key necessity is careful testing of your evaluation function. Do not leave it to the tree search to expose defects, as a defective evaluation is hard to diagnose from the sub-optimal moves that the search selects.

Hill-climbing has a good evaluation partner in the attractive and super-fast "piece position tables", which avoid the potholes described above. However, this is an important technique in its own right and will be discussed in a future article.

Jeff Rollason: October 2005