Author: Jeff Rollason - Updated 9th October 2023

This is a general review of the universal issue that all Backgammon programs are accused of cheating. This looks at the general issue of why it happens and why almost certainly no Backgammon programs actually cheat. We also detail the measures AI Factory has taken to address this and detail how users can run tests to prove that AI Factory's program does not cheat.

Gaming Laboratories International tested our Backgammon Free (version 2.241 published on October 5, 2017) for fair play. You can read the results here: www.aifactory.co.uk/gli.htm

We have an important update to share: To the existing options Auto Dice and Manual Dice we have added "Manual Seed". This lets users set their own random seed to control the dice, allowing them to replay any one game multiple times and experiment with different strategies against the same set of dice throws. It also allows a much more convenient alternative to Manual Dice, giving the player control of the dice without needing external dice. We have also added a full Review Round mode, so that users can re-examine games and even export them to a text file.

However a crucial aspect of the seed enhancement is that it also allows users who are concerned about the fairness of dice throws or program play to verify that the dice have not been tampered with and that the program cannot see future dice throws. These are rock solid tests designed to conclusively prove complete fairness. This feature will only impact a small portion of our users, but it will allow them to prove to themselves that our program does not cheat.

The use of this important new feature is explained here.

Background

Unfortunately one common feature shared by ALL Backgammon programs is that they are ALL aggressively accused of cheating. Even the top world-class programs that compete in human competition using real dice attract such claims (see www.bkgm.com/rgb/rgb.cgi?menu+computerdice).

We are therefore here jumping to our own defence and to the defence of our competitors. Of course we have already backed up our own defence by allowing users to test and conclusively prove that our program does not cheat, which you can read about here.

Why Cheat?

Why indeed cheat? This is one of the easier games to program and most reasonably capable games programmers could do a reasonable job creating a viable Backgammon-playing program. As a general guide, the better the program, the more likely it is that people will accuse it of cheating.

Considering our position, AI Factory has a strong background in the field of artificial intelligence. We have published in prestigious AI journals, lectured on AI at Universities around the world, and competed in AI tournaments, earning a world ranking of #3 in Shogi twice and winning Gold, Silver, and Bronze medals in computer Olympiads. You can read more about this here.

Given our expertise, it would be absurd for us to create a relatively simple game like Backgammon and have it cheat. We would have no reason to do so, as it would only damage our product for no benefit. This applies to all other Backgammon programs as well, almost all of which were created with a weaker AI background than ours.

The Universal Hostile Review Traffic, hitting all Backgammon Programs

As you might imagine, we have received a large number of hostile reviews accusing our program of cheating. However, we have suffered much less than some of our competitors, who seem to have been driven to distraction trying to counter these claims. In the last 2 years (up to August 2023), we have received 1030 claims that our Backgammon app cheats. This represents only 0.04% of all our Backgammon users over this time, so it's a very small group. 99.96% did not complain. We also had 101 users who took the time to post claims defending us, saying that the game does not cheat.

The Disproportionate Impact of Bad Reviews

While 0.04% may seem like a low percentage, about 40% of all our reviews claim that we cheat. We have even received death threats! This percentage is lower than it might be because we have had the program GLI certified as "fair play", provided detailed statistics, and explained in depth why it does not cheat. Our competitors, who have not taken such rigorous steps, have about 90% of all their reviews claiming cheating. This high percentage is only diluted by complaints of other serious flaws instead.

This is absurd, given that almost certainly none of them cheat!

Why This Might be Interesting

However now we are stepping back and assessing why this somewhat odd situation has arisen.

This article examines the phenomenon of misplaced accusations of cheating against all Backgammon programs. This is not just a case of mass hysteria, but is driven by issues in human perception and the somewhat opaque nature of good statistical Backgammon play. The topic is interesting and not immediately obvious.

The sections below analyse this issue in detail. However, users may prefer to jump directly to the FAQ section below, which explains the nature of cheating claims and how they can be debunked for our program.

So why are programs accused of cheating?

This divides into multiple reasons, but of course the root of much of the suspicion lies in whether the dice are fair. Part of this stems from whether they are random or not.

How good are humans at detecting whether or not numbers are random?

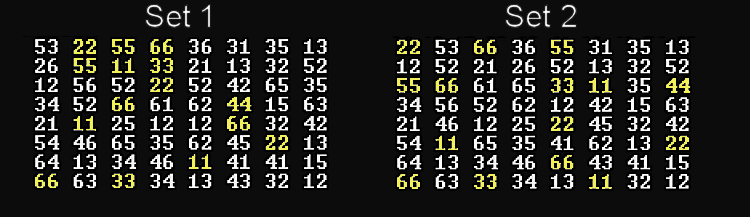

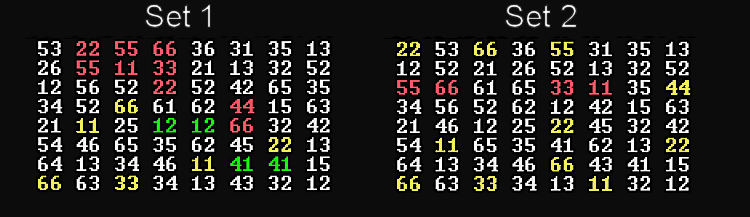

One issue in fair dice is how often doubles occur. Take a look at these two sets of numbers below (doubles are marked in yellow). One of these sets of dice rolls is random and the other is not. Can you tell which is which? When you have decided, jump down to the last section of this article and check your result.

Primary reasons for believing programs cheat:

(a) The impact of 120,000 players per day

(b) The deliberate play by the program to reach favourable positions

(c) Apophenia - Human perception of patterns

(a) The impact of 120,000 players per day

This sounds like a curious basis for proposing a reason for perceived cheating, but is very real. Our Backgammon has some 120,000 players per day. With that many people playing it is inevitable some of them will see what appear to be unfair rolls. If all these 120,000 players were playing in the same room, then any player thinking they see a bias in the rolls could talk to the people near them and quickly find no-one else saw this bias. They would then realise that it was not cheating and they had just seen unlucky rolls.

However everyone is connected only via Google Play reviews, and players mostly only get to see the reviews of those who complain.

This effect is actually recognised by Littlewood's Law, formulated by British mathematician John Edensor Littlewood, a principle used to predict the frequency of extremely rare events. Single events may look like "miracles" but with many possible observers (or events) the event can then become highly probable.

That effect could obviously be a problem for an app with so many users, but we took that one step further to actually quantify it.

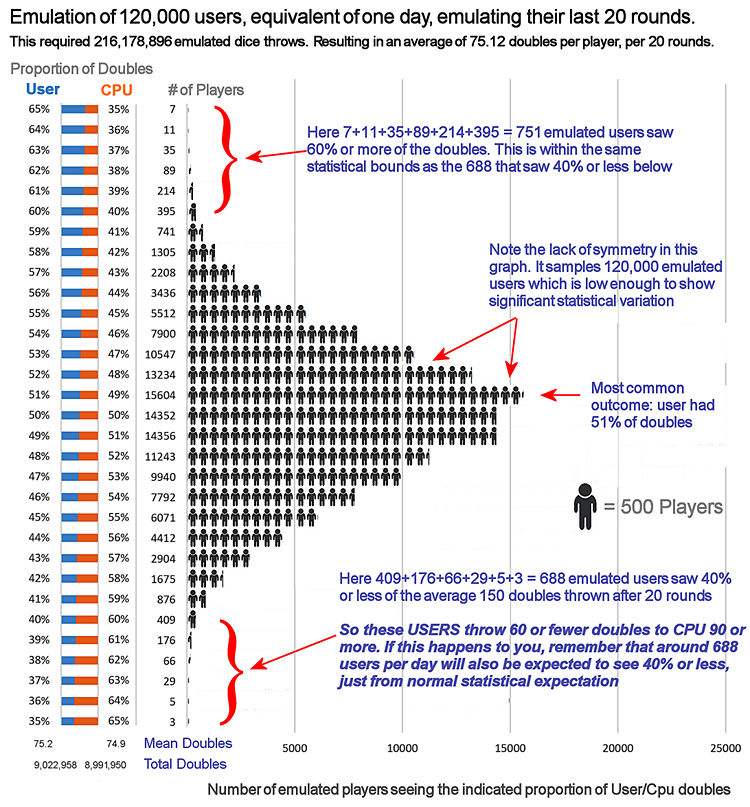

So we set up a massive test within the app to emulate a block of users playing 20 rounds of Backgammon and logging the number of doubles for the CPU and the player. This was repeated for 120,000 emulated players totalling 120,000 x 20 = 2,400,000 rounds. Each block of 20 rounds averaged 75.12 doubles rolled each. In all this required 216,178,896 dice rolls. These are the results:

What should jump out is that for 120,000 players, some 688 of them will only see 40% or worse proportion of all doubles thrown, just from expected statistical variation. There are 120,000 players per day that might write a review. The vast bulk of these will just play the game for fun and will not be counting doubles. Another huge bulk will see dice rolls that vary between slightly favourable for the player and slightly favourable for the CPU. A much smaller section will see the player getting much better rolls (they are very unlikely to review about this), and finally that very small section that see the CPU getting much better rolls, just by statistical chance, then write a bad review saying the dice are rigged. From above we should expect 688 players getting 40% or less of the doubles. It might look bad that 688 players will see such bad dice, however the dice rolling was fair. It was just that they got unlucky.

The concept may still be difficult to visualise, so we have another way of demonstrating it. The grid below represents 120,000 players playing per day, with each player represented by a grey dot. Players who would expect to receive only 40% of the last 150 doubles by random chance are marked with a red dot (688 players). Of these 688 players, 3 are marked with a "cheat" bubble. We receive about 3 accusations of cheating per day, but these are just 3 players out of the 688 who would normally expect to see such a biased skew in favour of the CPU due to normal statistical variation. We can single out this group of 3 and consider whether the outcome was unexpected or reasonable.

What that group of 3 saw is not significant as it was inevitable that there would have been about 3 players that saw this level of bias. It is very definitely not evidence of cheating.
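The scale of this effect can be sanity-checked with a few lines of arithmetic. The sketch below is a simplified model, not the in-app test described above: it assumes exactly 150 doubles per block of 20 rounds, that each double is equally likely to fall to the player or the CPU, and that "40% or worse" means at most 60 of the 150. Because the real test lets the number of doubles vary, the count it prints should land in the same general range as the 688 quoted above rather than match it exactly. The function and constant names are illustrative.

```python
from math import comb


def prob_share_at_most(total_doubles: int, max_player_doubles: int) -> float:
    """Probability that the player receives at most `max_player_doubles`
    of `total_doubles`, when each double is a fair 50/50 between the
    player and the CPU (exact binomial tail)."""
    favourable = sum(comb(total_doubles, k) for k in range(max_player_doubles + 1))
    return favourable / 2 ** total_doubles


DAILY_PLAYERS = 120_000  # figure quoted in the article
TOTAL_DOUBLES = 150      # assumption: fixed; in real play this varies per block
THRESHOLD = 60           # assumption: "40% or worse" read as at most 60 of 150

p = prob_share_at_most(TOTAL_DOUBLES, THRESHOLD)
expected_players = DAILY_PLAYERS * p

print(f"P(player gets <= 40% of doubles) = {p:.4%}")
print(f"Expected players per day seeing this = {expected_players:.0f}")
```

Even under perfectly fair dice the tail probability is small but not zero, and multiplying it by 120,000 daily players gives hundreds of people who will see this kind of skew on any given day.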

(b) The deliberate play by the program to reach favourable positions

This reason is much more subtle, but still real and is a key side effect of the nature of the game. Paradoxically, the stronger a program is, the greater the impression is that it might be cheating. This is not because human players are being bad losers but a statistical phenomenon. When you play a strong program (or a strong human player) you will find that you are repeatedly "unlucky" and that the program is repeatedly "lucky". You will find very often that the program gets the dice it needs to progress while you don't. This may seem like a never-ending streak of bad luck.

Note! This is not like section (a) above. There, a single unlucky result seen by one user carries no weight, because with 120k daily players someone is bound to see such a result by random chance, while most will not. The skew described here is instead a uniform, real effect, not a random anomaly, and so all of the 120k daily users will be able to see this happening to some degree.

Surely this must be cheating??

No, it is not. What is happening is that the program is making good probability decisions, as a strong human player might. It is making plays which leave its own counters in places where it has a high probability that follow-on throws will allow it to successfully continue, and that also obstruct the chance that its opponent will be able to continue.

The net effect is that your dice throws frequently do not do you any good whereas the program happily gets the dice throws it needs to progress.

This may be hard to visualise. After all it is not chess, where a program can exactly know what is going to happen. In Backgammon the program does not know what the future dice throws will be, so it needs to assess ALL possible throws that might happen and play its counters with the current dice so that it leaves the best average outcome for all possible future dice throws.

How are these distributions used?

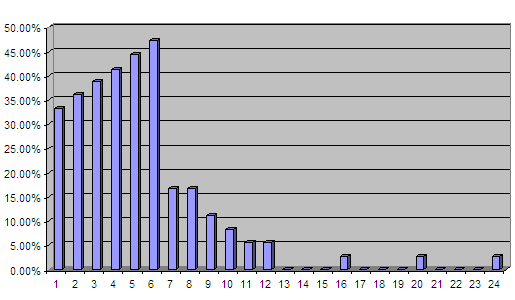

Key to both allowing your counters to get to the squares they need, and blocking opponent plays, is the distribution of dice throws. From schooldays everyone knows that with a single dice all the numbers 1 to 6 have equal probability and that with two dice the most likely throw is a 7, with 6 or 8 next most likely, in a nice symmetrical distribution of probabilities.

However the distribution of likelihood of achieving a value with 2 dice, where you can use either a single dice or both dice, is not so immediately obvious. See below:

Here the most likely value to achieve is a 6, with 5 next most likely, but 7 is now a low likelihood. Computer Backgammon programs know this and will try to arrange their pieces such that they are spaced to take advantage of this distribution. A program will not want to put a pair of single counters next to each other but will instead try and put them 6 squares apart.
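This distribution can be reproduced by enumerating all 36 possible throws. The sketch below counts, for each distance, how many throws let a counter travel exactly that far using either single dice value or the sum of both. It is a simplification: it ignores the fact that doubles can be played up to four times, and it ignores intermediate points being blocked. The function name is illustrative.

```python
from collections import Counter
from itertools import product


def reachable_counts() -> Counter:
    """For each distance, count how many of the 36 throws of two dice
    allow a move of exactly that distance using one dice or both."""
    counts = Counter()
    for first, second in product(range(1, 7), repeat=2):
        # A set avoids double-counting when both dice show the same value.
        for distance in {first, second, first + second}:
            counts[distance] += 1
    return counts


counts = reachable_counts()
for distance in sorted(counts):
    print(f"{distance:2d}: {counts[distance]:2d}/36")
```

Under these assumptions a distance of 6 is reachable with 16 of the 36 throws and 5 with 15, while 7 is reachable with only 6, which is the shape described above.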

The overall net effect is that, on average, the program makes good probability choices and consequently will have a better chance of good positions after the next throw, whereas at the same time the human opponent will have inferior chances.

These good AI choices will mean that your counters are restricted with lower chances of getting dice throws that allow them to move, whereas the program's counters leave themselves on points where they have a high probability of getting a dice throw that allows them to proceed.

This looks like plain "bad luck", but is actually because of good play by your opponent.

(c) Apophenia - Human perception of patterns

The answer to the earlier random-or-non-random test is shown below:

Set 1 was the random set. Features of both sets are marked in colour as follows, in this priority order:

Where 2 doubles are next to each other they are coloured in red. Where 2 identical rolls are next to each other they are marked in green and finally all other doubles are marked in yellow.

Set 2 actually has exactly the same dice rolls, but has simply been put through declustering to move similar dice rolls away from each other. They have the same dice rolls, but are just ordered differently. However in searching for non-randomness the human eye most easily imagines that Set 1 is non-random as it detects more obvious patterns. But the human eye should be more suspicious of Set 2 as it fails to show patterns that randomness would produce!

There is a credible biological reason for this. Humans and probably all species are over-sensitive to patterns, giving them survival advantages. This is an understood phenomenon, "Apophenia", as follows:

Apophenia is a term used to describe the tendency or inclination of individuals to perceive patterns or connections in random or unrelated data or events. It refers to the human tendency to find meaningful patterns and significance in various stimuli, even when there may not be any actual correlation or connection.

The term "apophenia" was coined by the German psychiatrist Klaus Conrad in the early 1950s. He originally used it to describe the tendency of individuals with schizophrenia to perceive false connections and see patterns in meaningless or random stimuli.

However apophenia is not limited to individuals with mental health conditions and can be observed in the general population. It is a cognitive bias that affects how people interpret and understand the world around them. The human brain is wired to recognise patterns, and sometimes this pattern-seeking behaviour leads to the perception of connections or meanings that are not based on objective evidence.

To put this in a biological context, a caveman might approach a wood and his brain might accurately assess that there is a 10% chance that there is a tiger hiding in the wood. However the brain transmits this as a 90% chance and the danger is avoided. If the caveman that receives the signal of a 10% chance then enters the wood, 10% of such individuals die. Over a period of generations the cavemen that received the exaggerated signals do not die and they become the dominant surviving group that pass on their genes.

Conclusion and External References

The reality is that almost certainly all claims of cheating are misplaced. The appearance of cheating is a consequence of so many people playing, which inevitably creates some instances of extraordinary luck, and it is also potentially evidence that the program is playing well, making very good probability decisions.

Do not just take our word for it. This is widely discussed in the Computer Backgammon world as follows:

# Backgammon Article: which leads to sections addressing why programs are accused of cheating

www.bkgm.com/articles/page6.html

# Computer Dice: www.bkgm.com/rgb/rgb.cgi?menu+computerdice

(this sub-link of the above lists discussion of claims of cheating against all the most serious Backgammon programs)

# Computer Dice forum from rec.games.backgammon:

www.bkgm.com/rgb/rgb.cgi?view+546

raising the issue of claims of cheating and how to address this.

Footnotes:

A full history of the first 15 years can be read here 15 years of AI Factory.

AI Factory was incorporated in 2003. The academic foundation comes from Jeff Rollason, who lectured in Computer Science at Westfield College UOL (University of London) and then at King's College UOL, between 1980 and 2001. At the latter he lectured in computer architecture for the second largest course in the department. He also supervised many Artificial Intelligence projects. He has lectured and presented papers at Northeastern University, Imperial College London University, Queen Mary London University, Tokyo University of Technology, Curreac Hamamatsu Japan and game conferences such as the AI Summit at GDC. This is the AI Factory list of publications. Note also AI Factory's periodical.

AI Factory's primary objective was to create and license Artificial Intelligence (AI) game engines to game companies. Among their customers was Microsoft, who took AI Factory's chess engine "Treebeard" for use in their MSN Chess. They later commissioned AI Factory to write a poker engine for their Xbox product "Full House Poker". AI Factory also created an AI simulation of a fish tank for the Japanese publisher Unbalance. In the 20 years since inception AI Factory has created many game engines and now primarily publishes to Google Play directly, but you will find their engines on Xbox, PC, Japanese mobile, Sony PSP, Symbian, Gizmondo, in-flight entertainment, Antix, Leapfrog, iOS, Android, Andy Pad and Amazon Fire.

The AI from AI Factory stems from Jeff Rollason's background in AI. He had authored Chess, Go and Shogi programs that had all competed in international competitions. The most noteworthy of these was the Shogi program "Shotest" that competed in many Computer Shogi tournaments in Tokyo, Japan, where in 1998 it came 3rd from 35 competing programs and in 1999 also 3rd from 40 programs. It came very close in 1998 to winning the world championship outright, as in the last round only Shotest and IS Shogi could win and Shotest had already beaten AI Shogi in an earlier round. Shogi is a substantially greater AI challenge than Chess, but less than Go, because the theoretical maximum number of moves is 593 (average 80) compared to 218 (average 30) for chess. This greatly increases the game complexity. Shotest has achieved the highest World ranking for a western Shogi program, beating 3 World Champions in the year they were World Champion.

From this it can be seen that AI Factory has both a strong industrial and academic background, having created a solid base of credible AI.

FAQ for Backgammon: Your important questions answered

With so many reviews accusing us of cheating, surely they must be right?

How are the random numbers generated in games?

How can I use the new Manual Seed to verify that the program does not cheat?

Why might a game developer program their Backgammon program to cheat?

How difficult is it to write a reasonable Backgammon program that does not cheat?

Why didn't AI Factory use cheating in their product?

Why did AI Factory have Gaming Labs International test their program for cheating??

Why might it seem like the program is frequently getting the throws it needs?

Ways to cheat: (1) Rigging the dice throws

Ways to cheat: (2) Allowing the program to see upcoming dice throws

Ways to cheat: (3) Rigging by using seeds that embed cheating or by pre-selecting seeds

Has AI Factory really covered all avenues to affirm their product does not cheat?

With so many reviews accusing us of cheating, surely they must be right?

This question is already pre-empted by our addition of RNG (random number generator) seed setting (below), which allows users to prove that our app does not cheat. However this section looks at the general malaise faced by all Backgammon programs.

120,000 Simultaneous Users?

At first glance, this question's assumption might seem reasonable. It's natural to assume that if you believe something and find that many others share your belief, then you must be correct. This is reasonable "common sense". However, this is actually a matter of exposure. If all 120,000 of our daily players were in the same location playing the game, you might notice some bias and mention it to the players near you, but they might not see it. As a result, you would dismiss your bad luck as insignificant.

On the other hand, if all these players were in different locations and connected to Google Play, and those who noticed some bias wrote a review accusing the game of cheating, then you would only see the reviews of people who had the same experience. These people would represent only a tiny fraction of all players. Players who don't see any bias wouldn't be motivated to go to Google Play and write a review just to say that the game is fair because they have no reason to do so. That being said, we have had about 100 people who, after seeing the cheating accusations, went to Google Play to say that the game does not cheat. We are grateful for their support!

The point is that if players were exposed to all other players, they would quickly realise (and correctly) that the game does not cheat because almost anyone they ask will not have seen the bias they saw. Therefore, it was just chance, not cheating.

Littlewood's Law of Miracles

This effect is actually recognised by Littlewood's Law, formulated by British mathematician John Edensor Littlewood, a principle used to predict the frequency of extremely rare events. Single events may look like "miracles" but with many possible observers (or events) the event can then become highly probable.

How are the random numbers generated in games?

In an ideal world, games would use atomic quantum events to generate random numbers. However, this is not a practical solution as it would require an online lookup from a server. The device may not always be connected to the internet, and even if it were, such a lookup could introduce delays. Instead, the program uses a standard linear congruential generator (LCG) RNG algorithm to generate dice throws. This is seeded each time by the time in milliseconds since the device was turned on. Each possible seed value delivers a different set of dice rolls. This is a common choice for games and provides good pseudo-random numbers, making it ideal for emulating dice throws.
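To make the idea concrete, here is a minimal sketch of a linear congruential generator turned into a dice roller. It is not AI Factory's code: the multiplier and increment are the well-known "Numerical Recipes" constants, chosen here purely for illustration, and the class and function names are hypothetical. It only shows the general principle that the seed fully determines the dice sequence.

```python
class LcgDice:
    """Illustrative dice roller driven by a linear congruential generator."""

    # Assumed constants (Numerical Recipes); not taken from the app.
    MULTIPLIER = 1664525
    INCREMENT = 1013904223
    MODULUS = 2 ** 32

    def __init__(self, seed: int) -> None:
        self.state = seed % self.MODULUS

    def next_die(self) -> int:
        # Core LCG step: state = (a * state + c) mod m
        self.state = (self.MULTIPLIER * self.state + self.INCREMENT) % self.MODULUS
        # Use the higher bits, as the low bits of a power-of-two LCG are weak.
        # The modulo introduces a very slight bias, acceptable in a sketch.
        return (self.state >> 16) % 6 + 1

    def roll(self) -> tuple[int, int]:
        return self.next_die(), self.next_die()


def rolls_for_seed(seed: int, count: int) -> list[tuple[int, int]]:
    """Return the first `count` throws produced from `seed`."""
    dice = LcgDice(seed)
    return [dice.roll() for _ in range(count)]


first_game = rolls_for_seed(seed=123456, count=10)
replay = rolls_for_seed(seed=123456, count=10)
print(first_game)
print("Same seed gives same dice:", first_game == replay)
```

Because the generator has no input other than its seed, replaying with the same seed must reproduce the same throws, whatever happens on the board.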

This is a small detail, but worth mentioning. Programmers are used to working with such random number generators and they know that these have to be "seeded" at the start. For the language C this was standardised in 1990 by C89/C90 (ISO/IEC 9899:1990), with the srand() seeding function, so programmers have been using this for over 30 years. Because srand() tends to be called just once, it gives the impression that random number generators are only seeded once. However, in a generator of this type, every time a random number is generated, that number is in effect used to re-seed the generator ready for the next number. Random number generators are therefore being endlessly re-seeded. It's a small detail, but one that should make them easier to comprehend.
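The re-seeding point can be shown with a bare linear congruential step. In the sketch below the generator's output is its entire internal state, so seeding a fresh generator with any output simply continues the original sequence. The constants are assumed for illustration, and real library implementations of rand() often return only part of their state, so this is a simplified picture rather than a description of any particular library.

```python
# Assumed illustrative constants; not taken from any specific library or app.
MULTIPLIER = 1664525
INCREMENT = 1013904223
MODULUS = 2 ** 32


def lcg_step(value: int) -> int:
    """One linear congruential step: the result is both output and new state."""
    return (MULTIPLIER * value + INCREMENT) % MODULUS


def sequence_from_seed(seed: int, count: int) -> list[int]:
    """Generate `count` values, feeding each output back in as the next seed."""
    values = []
    state = seed
    for _ in range(count):
        state = lcg_step(state)
        values.append(state)
    return values


full = sequence_from_seed(seed=42, count=6)
# Start a "new" generator seeded with the third output of the first one.
resumed = sequence_from_seed(seed=full[2], count=3)
print("Resumed run continues the original:", resumed == full[3:])
```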

This section explains how to perform the tests and why they work. (Click here to instead jump to the full Backgammon FAQ)

At the core of this is the fact that essentially all games programs that use dice rely on random number generators, such as the LCG type defined above. If you provide the generator with a specific seed value, it will determine all subsequent dice throws. By inputting the same seed from a previous game into a new game, you can see the same sequence of dice throws. If there is no deviation from the dice thrown, this serves as proof that none of the dice in the original game were tampered with. Our app seeds this random number generator with the time in milliseconds since the phone was switched on. This is almost certainly the method used by all apps.

While our program has been extensively verified by GLI to not cheat, we understand that many users might prefer to prove this for themselves. The Manual Seed setting provides a definitive way for players to do this.

First it should be said that there are a number of ways a program might try and cheat, but the only viable methods are (a) and (b) below, which can be easily detected using the seed test we have provided. However there are other much weaker methods that an app could try and use to cheat and these are also described here.

Note also, as an aside: We are only introducing this facility to allow players to run these tests now, 13 years after the product was first released. We have however been accused of cheating for all of those 13 years! If we had had any serious intention to cheat, we would clearly have only used the powerful methods (a) and (b) below as the only credible ways to do this. Our tests provided now debunk that. It would have been a waste of time for us to consider the ineffective methods listed under (c) as they scarcely work and are so easy to quickly detect using just statistics. However the latter methods under (c) are still discussed and analysed here as they are at least interesting, even if no-one would use them.

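There are 3 main ways that a program could try and cheat, by:

(a) Rigging the dice throws

(b) Allowing the program to see upcoming dice throws

(c) Rigging by using seeds that embed cheating or by pre-selecting seeds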

You can conclusively prove that our app does not use cheat methods (a) or (b) by using a simple Manual Seed Setting test. The third possible method (c) above also offers another much weaker way to cheat and this is dealt with in that linked section above.

The section below now immediately deals with (a) and (b) above.

(a) Testing for rigging dice throws by choosing better dice during play

(This method is also discussed here)

To verify that the dice throws are not being rigged, you need to compare the dice rolls for one seed in one game with another game that has different plays but uses the same round seed. The dice rolls will therefore be the same, but the moves will be different. This will show that you get the same rolls in both games, proving that the dice are fair.

Create a Reference Game

(1) Select a game to test

To create a reference game for comparison, you can randomly select the game to test at a time of your choosing. This avoids the possibility that the program might conceivably decide not to cheat for the first game, expecting you to test right away.

You can choose a game to test before you start playing, or during play if you see some suspicious play that you would like to test. If you choose the game after it has started, you will need to make sure you can obtain all moves and dice rolls already played.

If your chosen game is part-way through, because you found a suspicious play, you will need to:

(i) If playing level 7, undo and replay all moves (if you can recall them). The program will then deliver the same dice on replay, or:

(ii) Drop into the menu option Round Review to list previous plays so you can write them down or export them, or:

(iii) Simply just continue from that point and later on obtain the moves from Round Review when complete. This is the easiest option.

(2) Record all dice rolls in the game (or round)

Having decided "this is the game I want to test" at the start of a game, or stopped mid-round to test a suspicious situation, you will need the round record of all the dice thrown.

You can choose from 3 different options a, b, and c below to obtain this:

(a) Write dice rolls down as you play so you have a physical external record, OR: (b) below

We strongly recommend that you drop into Menu, Options (wrench icon on action bar) and select Double Button Next To Roll Button so that you can still see the CPU dice displayed after the CPU has played. This will make it easier to write down every dice roll. You might also note down which player had which dice rolls, to help make sure that no dice rolls are missed.

If you had decided to wait for something suspicious during play, rather than choosing to test a round as it starts, then if you were playing level 7 you can undo to the start and then list the dice rolls as you redo the round. To replicate the round exactly, you will need to be able to remember all your moves correctly. This is level 7 only because only level 7 will replay exactly the same moves in response to your moves. Levels below 7 are deliberately weakened to introduce mistakes randomly in play. If not playing level 7 then you will have to instead pick up previous moves from Round Review.

When you have a complete list you can compare it with Menu, Round Review to finally check that it appears to be correct. Round Review helpfully not only lists the moves but allows you to step through each play. We suggest you avoid auto-bearoff as moves will whisk past too quickly to record.

(b) Export the game info from Copy to Clipboard button in Round Review and paste into a notes app for an external record, OR: (c) below

As an alternative to writing down all dice rolls, you can instead play out the game and then drop into Round Review and copy them from there or, instead, Copy Seeds + Moves To Clipboard from Round Review so you can then copy the round record to an editor or notepad. You will then have the moves list held in a text form. This works regardless of whether you chose a game at the start of play, or had waited until a suspicious play occurred.

(c) If you have a second phone available for the test, just leave the game in the app and review it with "Round Review"

This is clearly the easiest option, comparing the round played inside the same app but on different phones. If you have a second phone for the test, you can simply leave Round Review visible on the original phone for reference. You will then later be able to play out the test game on the other phone, while comparing move by move to check that dice rolls are the same. Again this works regardless of whether you chose a game at the start of play, or had waited until a suspicious play occurred.

(3) Play out the reference game

If you had found a situation mid-game that you wanted to test, probably because of some suspicious play, then simply play out the rest of the game. If this is a new game you have randomly chosen for the test, then enter Options and select Auto Dice (the default). Then exit Options and select Single Player from the main menu. You should then play the level you want to test. We would recommend level 7 as that is the strongest: it would be pointless to cheat with a lower level, but then not with the strongest level 7. You have the option now to take the "Match Seed" from this menu, although you will be able to take it later. Now play out the rest of the round, recording dice throws if needed, following the instructions in (a), (b) and (c) above.

(4) Find that game's Match Seed (or Round Seed to test a specific round)

After you have played out the remainder of the game above, you will need the seed for the game. The Match Seed will have been shown on the Match Settings screen at the start of match but can also be picked up later from the new Menu, Round Review option. If you are testing a single round then you need the "Round Seed". If testing a multi-round match you will need the "Match Seed".

Test The Reference Game

You have the option at this point to follow one of 2 test paths, as follows:

(i) If you are testing on the same phone and have a written or exported list of dice as well as the Match Seed or Round seed, you can exit the current game. You may then choose to test in the app as-is, or if you suspect that the app might retain knowledge of the last match, you can uninstall and re-install it to clear data. Or:

(ii) If you have a second phone (perhaps belonging to a friend), you can now run the test on that phone. You can then compare the reference game via the Round Review or the exported list on the first phone. This is the easier option.

Note that running the test on a second phone is the most convincing and secure option, as it completely separates the test from the original game. This eliminates any imaginable way that information might be passed between the original and test games. If this is on a friend's phone, and also under a different account, then you cannot get more separate than that!

Whether on the same phone, having exited the game, re-installed the game or simply running on the second phone, you need to follow these steps:

(5) Set up the test game to use Manual Seed

You can get ready to start a new game with the seed taken from the reference game above. Tap Menu and choose Options (wrench icon on the action bar) and switch from Auto Dice / Manual Dice to Manual Seed. This button is below the board and piece set option buttons on the right of the screen.

(6) Go to Single Player and enter the seed

Choose Main Menu then Single Player. Make sure that settings such as direction of play are the same. You must now choose Set Seed and enter the seed taken from the reference game. Then tap Play to start the new match.

(7) Play out the game

Note! In this playout the dice should be the same but the moves different. If you only have one phone and have written the moves down, you can just play through comparing your notes with dice thrown in the app. If you have one phone and exported the game record to a notepad, you will probably need to use the Google task switcher to toggle between the backgammon app and the notepad, where you have the game record. If you have 2 phones then just put the phones next to each other.

You should now replay the game, trying to make different moves where possible so that the game quickly deviates from the original game. This will ensure that follow-on game positions are different from the original game, avoiding the possibility of re-using the same potential cheat roll in response to the same or similar situation in both games. This will show that the app is not inserting dice rolls that the CPU needs in certain situations: the game situation will be different but the dice rolls will still be the same.

The Proof

This test will show that the dice throws are exactly the same in both games. You can take moves back and play different moves to create different games, but all will show exactly the same dice throws for the same seed. This is conclusive proof that it does not override the random dice by substituting preferred dice throws.
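The principle behind this test can be illustrated with a minimal Python sketch. It assumes a seeded pseudo-random generator (Python's random.Random is used purely as a stand-in, not the app's actual RNG) and a hypothetical helper called dice_sequence. The dice depend only on the seed and never on the moves played, so two playouts with the same seed must show identical throws.

```python
import random

def dice_sequence(seed, n_rolls):
    """Return the first n_rolls dice throws produced from a given seed."""
    rng = random.Random(seed)  # stand-in generator, not the app's RNG
    return [(rng.randint(1, 6), rng.randint(1, 6)) for _ in range(n_rolls)]

# Reference game and test game: same seed, but the player chooses different moves.
reference = dice_sequence(123456, 40)
replay = dice_sequence(123456, 40)

# The moves never feed into the generator, so the throws must match exactly.
print(reference == replay)
```

Any roll that differed between the two lists would mean that something other than the seed had influenced the dice.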

Having completed this test and found no cheating, you should also reflect that almost certainly no other Backgammon programs cheat either. The accusations of cheating are made against ALL Backgammon programs, which does not make sense. From our engineering experience, we consider it highly improbable that anyone would actually cheat.

This works for both single-round and multiple-round matches. If you want to test just one round from a match, you can pick up the round seed instead of the match seed to test only that round. Note that this round seed is for use with just that round. You would test that round as if it were a single-point match. If you want to test other individual rounds from the same match, you should pick up the specific round seed for each of those rounds.

If you try these tests and are satisfied that indeed our program does not cheat, we would appreciate it if you could pop a review on Google Play saying so. You would help those very many vexed users out there who have not tested it but are certain it cheats.

(b) Testing for allowing the program to see upcoming dice throws

(This method is also discussed here)

(1) Select a level 7 game to test

This method only works for testing the top level 7, as all other play levels have some randomisation in the choice of play and might deviate. If level 7 does not cheat to provide a strong opponent, then there is no reason for the lower levels to cheat!

(2) Record all dice rolls and moves for both players in the game (or round)

See the previous section above "(2) Record all dice rolls in the game (or round)" for options of how to do this, following just steps (2) and (3) and the section "Test The Reference Game" from this.

You will now be on the same phone or a second phone, ready to start a new game.

(3) Set up the test game to use Manual Dice

Go to Menu, Options and tap the Auto Dice / Manual Dice / Manual Seed button until it shows Manual Dice. This will allow you to enter dice values manually for both players. Unlike the test "(a) Testing for rigging dice throws by choosing better dice during play", here we need to make sure that the game record is the same for both the player's moves and the dice, so we need Manual Dice. We are testing whether the CPU makes different moves in the same game with the same dice when it is unable to see what dice are coming.

(4) Go to Single Player, check settings are the same and tap Play

On tapping "Single Player" you will now see that there is no seed value displayed, as there is no seed. You again need to set play level 7. You now need to play out the same player moves and input the same dice from the reference game. You will be looking for any changed CPU moves. (Note the optimised suggestions below to make this test easier).

As this test removes the round seed, it is impossible for the CPU to anticipate which dice rolls are coming. If you play the same moves as in the first game, the level 7 CPU will play the same moves in reply, even though it is unable to see upcoming dice rolls.

This will conclusively prove that the top level 7 does not cheat by knowing what dice are to come. If level 7 does not cheat then there is no reason for the lower levels to cheat!
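The logic of this test can be sketched with two toy engines in Python. Everything here is hypothetical and for illustration only: fair_move and peeking_move are invented names, the move labels are placeholders, and neither resembles the real level 7 engine. The point is simply that an engine which depends only on the current position and roll repeats its moves under Manual Dice, whereas one that relied on future dice could not.

```python
def fair_move(position, roll):
    """Toy engine: the reply depends only on the current position and roll.
    (position is unused in this toy, but a real engine would use it.)"""
    return "run" if sum(roll) >= 8 else "hold"

def peeking_move(position, roll, opponent_next_roll):
    """Toy cheat: takes a risk only when it knows the opponent's next roll is harmless."""
    if opponent_next_roll is not None and 6 not in opponent_next_roll:
        return "leave blot"
    return fair_move(position, roll)

# CPU rolls and the player's following rolls from an imagined reference game.
cpu_rolls = [(3, 1), (6, 5), (4, 4)]
player_next_rolls = [(2, 1), (6, 3), (5, 2)]

# Reference game: a cheating engine could read the seeded dice list ahead of time.
reference_fair = [fair_move(i, r) for i, r in enumerate(cpu_rolls)]
reference_peek = [peeking_move(i, r, nxt)
                  for i, (r, nxt) in enumerate(zip(cpu_rolls, player_next_rolls))]

# Manual Dice replay: future dice do not exist yet, so nothing can be peeked at.
replay_fair = [fair_move(i, r) for i, r in enumerate(cpu_rolls)]
replay_peek = [peeking_move(i, r, None) for i, r in enumerate(cpu_rolls)]

print(reference_fair == replay_fair)   # the fair engine repeats its moves
print(reference_peek == replay_peek)   # the peeking engine is forced to change its moves
```

In the real test, you play the role of this comparison: identical CPU replies in both games mean the moves never depended on dice that had yet to be thrown.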

Readers might also like to check the section "Ways to cheat: (2) Allowing the program to see upcoming dice throws", which considers this cheating method. Although this type of cheating would deliver a much higher win rate, it would also be very easy to spot, as it causes the program to make obviously high-risk plays that no human would make.

Optimising the Test when Running on Another Device

Again, we strongly recommend that you run the test game on a second device, probably a friend's phone. From our experience this is the easiest way to run the test and provides the maximum separation between the original game and test game. This is easier than "(a) Testing for rigging dice throws by choosing better dice during play" above as in this case you need the game record to be exactly the same. You can get Round Review on the first device to show you what each of your moves was in the original game, so you can step through and copy each move one by one. Each time you click on the single step down arrow on the first phone's Round Review display, it will show the updated board and mark the to and from squares with green triangles. So you can very easily just copy the moves given by Round Review on the first phone and play these on the second phone. You can then compare the CPU's move on the second phone with the game move shown on the first phone. They will be the same (if you had correctly set level 7 play in both the reference and test game).

If you do not have access to 2 phones you still have the option to re-install the app or clear the app cache+data if you are concerned that the original game record or seed is somehow still on the device for the program to copy (but you will need to export your stats before doing that, so you can retrieve these).

Other tests

You now have the flexibility to set the seed at the beginning of a game. If you're wary of any potential manipulation, you can always choose to set your own seed for each game using the "Manual Seed" option, as an alternative to Auto Dice, etc. You can then utilise the app to roll the dice for the seeds you've set. This method is simpler than Manual Dice and provides the assurance that you can retest any seed at any time. This test would confirm that a particular seed consistently yields the same dice results.

While selecting a seed is an additional task, the app will display your most recently used seed. You have the freedom to sequentially go through numbers such as 1,2,3.. or 1,3,5... or 2,3,4... etc. Alternatively, you can request ChatGPT to "provide a list of 200 random numbers between 1 and 999999", or something similar, and use these numbers. The Match settings will display your previous seed, enabling you to ensure that your next seed is different. The "Manual Seed" option puts you in total control.

Swapping the Dice with the CPU

As a follow-up test, you can also input the seed and choose to Swap Dice, so you swap sides. This will allow you to play with the dice that the CPU had and vice versa. You can then test if you would do better than the CPU given the dice it had. Additionally you can use the same seed to play against different CPU levels to see how well they each do when using the same dice rolls.

Using the Seed Setting as a Teaching Tool

Finally you can use this facility to test seeds to endlessly re-play the same game, trying out different strategies against a game where the result is already known. This is a great learning tool as you can repeatedly return to the same game to improve your technique.

Seeds that embed cheating by the Algorithmic Insertion of Doubles

Note that any attempt to cheat by just taking doubles at will would be swiftly and very easily detected through the random seed testing we have provided, rendering it completely useless. That comes under "(a) Rigging dice throws by choosing better dice during play", covered earlier.

This section looks at other possible (and much weaker) cheating options: either pre-selecting seeds (see below) or employing a subtle algorithmic method that infrequently and randomly substitutes a standard dice throw with a double, but only during the CPU's turn. This would act as a modified seed that could evade a seed test. The substitution would not be linked to a game position, but would instead appear at unplanned points in the game. This could potentially be implemented by waiting for a specific pattern in the current seed value. Consequently, the event would repeat at the same point for the same seed when reused, and would therefore be undetectable by a simple seed test.

This method, of course, needs to insert doubles for just one side, so it needs to know who the dice are thrown for. However, using this method and then switching sides is not guaranteed to be exposed by a seed test either. This is because a switch could potentially alert any cheating program to the possibility of a test being conducted, giving it an opportunity to ensure that the doubles appear at the same point, but this time in favour of the player. We have thoroughly considered whether a generic seed test could be guaranteed to detect this subtle (but rather poor) cheat, but we have found no way to achieve this. However, as shown below, an algorithmic insertion of doubles is exposed by very profound statistical flaws.

Why the Method is Poor

Despite its potential, this remains a very poor cheating method indeed. It would have to rely on providing a small number of random extra doubles, each of which would be significantly less valuable than doubles provided for specific game situations (which would be easily detected and exposed by a seed test). To gain a game advantage, you would need more doubles than if you had directly chosen when to have them.

Measuring how Effective Algorithmic Insertion of Doubles or Seed Grading might be

From the bar chart in section "(a) The impact of 120,000 players per day", each player averages 75.12 doubles per 20 rounds, or 3.756 doubles per round. If you were to provide one extra double per round on average, the CPU would average 4.756 doubles per round, or 4.756/3.756 = 1.26624 times as many doubles. However, testing shows that the program at level 7 with this extra double would only win 54.66% of the time against a basic level 7 (in a 30,000 game test), offering a somewhat limited benefit.

If you really think about it, this should not be unexpected. If you had just one extra double per round, this is very unlikely to give you a very substantial advantage.
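The arithmetic behind the doubles ratio can be checked with a few lines of Python. The only inputs are the 75.12 doubles per 20 rounds quoted from the bar chart and the assumed one extra double per round; the variable names are just for illustration.

```python
doubles_per_20_rounds = 75.12            # figure quoted from the bar chart
doubles_per_round = doubles_per_20_rounds / 20
boosted = doubles_per_round + 1          # assumed: one extra double per round for the CPU
ratio = boosted / doubles_per_round

print(f"{doubles_per_round:.3f} doubles per round")
print(f"{ratio:.5f} times as many doubles")
```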

Moreover, the dice statistics from this weak cheating method would also somewhat expose this strategy. If you again refer to the bar chart above, the point at which the CPU was getting 60% or more of the doubles purely by random chance corresponded to just 688 players out of 120,000 simultaneous daily users (0.57% of all users).

If the CPU was receiving 1.26624 times as many doubles, it would reach 60% or more much more readily. By taking the figures from the bar chart, scaling the CPU doubles by 1.26624, and scanning up the bar chart to the point where this represents 60% of doubles for the CPU, you'll find this occurs at the row where the user has 46% and the CPU has 54% with random chance, but with the CPU getting doubles 1.26624x more often.

Verifying the Maths

This can be verified by noting that 54% x 1.26624 = 68.377%, yielding a user+CPU total of 46% + 68.377% = 114.4%. As this exceeds 100%, each of these values needs to be divided by 1.144, resulting in 40.2% and 59.8%, which is the 40/60 threshold we were aiming for.
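The same normalisation can be reproduced in Python as a quick check. This is only a sketch of the calculation described above: the 46% and 54% shares are the chart row under pure chance, and the multiplier is derived from the 3.756 doubles per round figure.

```python
factor = (3.756 + 1) / 3.756          # CPU doubles multiplier, about 1.26624
user_share, cpu_share = 46.0, 54.0    # chart row under pure chance (percent)

cpu_scaled = cpu_share * factor       # CPU share inflated by the extra doubles
total = user_share + cpu_scaled       # now exceeds 100, so renormalise
user_seen = 100 * user_share / total
cpu_seen = 100 * cpu_scaled / total

print(f"user {user_seen:.1f}%, CPU {cpu_seen:.1f}%")
```

The renormalised split lands on the 40/60 threshold, which is why the 54% row of the chart is the starting point for counting affected users.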

If you sum all the users that saw CPU totals from 54% to 65% then, from a total of 120,000 users, 24,417 would observe the CPU getting 60% or more of the doubles. That is a huge increase from just 688! This represents 20.3% of all players instead of just 0.57%! Such a discrepancy would glaringly expose the cheat and would only offer a marginally improved win rate of 54.66%. The cheat would be blatantly obvious but the gain somewhat limited.

Again it should be easy to imagine that getting 26% more doubles would clearly expose the CPU in the longer term stats.

Conclusion

Consequently, any attempt to algorithmically fix doubles in a seed path would be an essentially futile way to cheat, as it would be absurdly obvious in the long term that the CPU was getting more doubles. In contrast the 0.57% of users that saw the CPU gaining 60%+ of doubles just by random chance would find that this spurious high 60% rate would just fade away as they played more games.

In contrast, a program that used the more powerful and attractive method of selecting doubles whenever it best suited would deliver a much higher win rate for fewer doubles, but our seed test would immediately detect this, so it cannot be used. The remaining weaker method, using rarer rigged algorithmic doubles, is not plausible due to its poor performance and its obvious, serious statistical exposure.

Pre-Selecting Seeds

The other option in this section is the grading of seeds, where the CPU builds a list of possible seeds and then chooses the seed that will deliver the best dice. As above, this has no pre-knowledge of what the player will do, so it can only choose on the basis of a high dice total or number of doubles. This suffers from the same problems as the algorithmic insertion of doubles discussed above. If you chose the best seed you would likely be massively exposed in terms of doubles or dice total scores, which would make it very obvious it was cheating, as essentially everyone would be seeing the bias to the CPU. You would instead have to select a seed that gave (say) one extra double, but this fails with the same maths as above, giving 26% more doubles and resulting in over 20% of all players seeing the CPU getting 60% or more of the doubles after 20 rounds, instead of 0.57% of players seeing this. It is therefore equally useless.
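To make the idea of seed grading concrete, here is a minimal Python sketch of what such a scheme might look like. It is purely hypothetical: cpu_doubles is an invented helper, random.Random stands in for a real dice generator, and the CPU is simply assumed to take every second roll. It shows that a graded seed can only be picked on crude statistics such as the doubles count, which is exactly what would then show up in the dice stats.

```python
import random

def cpu_doubles(seed, n_rolls=60):
    """Count doubles on the CPU's turns (taken here to be every second roll)."""
    rng = random.Random(seed)  # stand-in generator, not the app's RNG
    rolls = [(rng.randint(1, 6), rng.randint(1, 6)) for _ in range(n_rolls)]
    return sum(1 for a, b in rolls[1::2] if a == b)

candidates = range(1000, 1050)                 # hypothetical pool of seeds to grade
graded = max(candidates, key=cpu_doubles)      # the seed a cheat would pick
average = sum(cpu_doubles(s) for s in candidates) / len(candidates)

print(graded, cpu_doubles(graded), round(average, 2))
```

The chosen seed always has at least as many CPU doubles as the average candidate, so repeated use would push the long-term doubles statistics in the CPU's favour.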

Why might a game developer program their Backgammon program to cheat?

This goes right to the heart of the issue. The only reason would be that the developer was unable to easily write a program that was good enough to play without cheating. This would indeed be a very desperate step to take as this would invite bad reviews and surely badly damage the sales/downloads of the program. Their program would be doomed if everyone thought it cheated.

How difficult is it to write a reasonable Backgammon program that does not cheat?

If a developer could create a program that was good enough to play without cheating, they would surely do so to avoid damaging their reputation. This raises the question: How difficult is it to develop such a program? The answer is, "Not very difficult." Writing a plausible Backgammon program is much easier than writing a program for games like chess or checkers. These games require search lookahead and the implementation of minimax and alpha-beta algorithms, combined with a reasonable static evaluation. Even with these core algorithms, the resulting program may still be relatively weak and struggle to win any games. However, Backgammon is simple enough that a high level of play can be achieved without any search at all. There are simple sources for this program published at Berkeley that programmers can use for free. This Berkeley program plays at a level that is good enough for a releasable game product and we tested our program against it. You can read our article on this and our program development here: Tuning Experiments with Backgammon using Linear Evaluation.

Why didn't AI Factory use cheating in their product?

From our perspective, the question would be, "Why on earth would we do this crazy thing???". Cheating has no advantages and massive disadvantages. It would be absurd for us to cheat, as we have very strong internal AI development skills. We publish in prestigious AI journals, lecture on AI at universities around the world, and compete in international computer game tournaments. We have competed in Chess, Go, and Shogi computer tournaments, and most notably in computer Shogi (Japanese Chess) with our program Shotest. Shogi is a much more difficult game to program than either Chess or Backgammon. Shotest was ranked #3 in the world twice and nearly ranked #1. It also won Gold, Silver, and Bronze medals in the World Computer Olympiads in the years 2001, 2000 and 2002. It is unimaginable that we would want to cheat when we have more than enough internal AI expertise to easily and quickly develop a good program without cheating. Cheating would only result in reputation loss with absolutely no gain, and loss of users wanting to play it.

Why did AI Factory have Gaming Labs International test their program for cheating??

Even though we knew that our program did not cheat, we wanted to convince others of this fact. To do so, we needed a professional, independent third-party assessment to certify "Fair Play" that we could present to our users. Gaming Laboratories International (GLI) took our source code and confirmed that the version they built matched the version on Google Play. They then analysed the dice throws and single-stepped through the source code to determine that the program did not tamper with the dice or engage in any processing that could be linked to cheating. GLI confirmed "Fair Play." Of course, this was only for a specific version and was not repeated for every minor update we made to the program. However, the version they tested as "Fair Play" (2.241) was published on October 5, 2017, and remained in release until May 24, 2018, for a total of 7 1/2 months. This version received just as many claims of cheating as any version before or after it. Therefore, it was a completely valid proof of no cheating where people had already claimed it cheated.

Why might it seem like the program is frequently getting the throws it needs?

This is addressed in the section above, "(b) The deliberate play by the program to reach favourable positions." This is probably the most common reason that users believe the program cheats. Ironically, the better the program, the greater the impression of cheating. Users who are bothered by this might be better off searching for weaker programs. The strength of the program lies in its subtle play to achieve a very good statistical game, leaving pieces in positions with reduced vulnerability and a better chance of making progress. Users should read the section above, as the distribution of possible values that can be achieved with two dice is not as obvious as it might seem.

If a user sees a distribution of throws or doubles that seems extremely unlikely, why might this not indicate cheating?

This is addressed in the section above, "(a) The impact of 120,000 players per day". The issue is that a particular result that a user sees, when plugged into a binomial distribution, may appear to be extremely improbable. However, this overlooks the fact that about 120,000 players per day have a chance to see such an improbable distribution. Given this, hundreds of people per day would be expected to see such an outcome. It is therefore extremely unlikely that, in the entire population of users, some would not see such a distribution every day. In fact, it would be very suspicious if no one saw a skewed distribution of rolls, as normal probability would expect this to happen.
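The scale effect can be illustrated with a short Python sketch. It assumes that players are independent of one another, and the "1 in 10,000" outcome is a made-up example rather than a measured figure; the 688 out of 120,000 value is the one quoted earlier for the CPU getting 60% or more of the doubles by chance.

```python
players_per_day = 120_000
p_cpu_60_percent = 688 / players_per_day   # the 0.57% figure quoted earlier

def expected_observers(n_players, p):
    """Average number of players who see an outcome of probability p."""
    return n_players * p

def prob_at_least_one(n_players, p):
    """Chance that at least one player sees it, assuming independent players."""
    return 1 - (1 - p) ** n_players

rare = 1 / 10_000   # hypothetical "1 in 10,000" outcome for a single player
print(expected_observers(players_per_day, rare))
print(prob_at_least_one(players_per_day, rare))
```

An outcome that looks almost impossible to one player is therefore close to certain to be seen by somebody on any given day.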

Readers should also refer to "With so many reviews accusing us of cheating, surely they must be right?" above which explains the 120,000 user issue from a different perspective.

Ways to cheat: (1) Rigging the dice throws.

The idea that our app cheats has already been debunked by the test above, which proves that our app does not cheat. However, let's take a look at how another programmer might try to cheat. One approach could be to manipulate the values of the dice thrown, such as the number of doubles thrown or giving the dice throw that hits an opponent's blot. However, this would be difficult to implement convincingly as an effective cheat, because it would probably skew the stats and so be fairly obvious. So you would have to be careful. It does, however, also offer another powerful option: simply swapping rolls. If you create the entire expected roll list for the round to come, then when you want a better roll you can search the dice list for the one you need and simply swap the current one with that. This would make it undetectable in terms of the stats, although it might group odd rolls together at the end of the game. This would work very well and clearly deliver a much better win rate.

The flaw is that again it would be immediately exposed by the seed test above. So it would be very easy to conclusively prove our app does not do this, but a program without our seed test exposure could get away with it. However we doubt that any Backgammon programs in the app store cheat at all, so this is just an academic possibility.
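A small Python sketch shows why the seed test catches roll swapping. The function swap_in_double and the roll list are hypothetical and only illustrate the idea: the swap leaves the overall statistics untouched, but because the moment the CPU "needs" a double depends on the position, two playouts of the same seed with different moves end up showing different dice sequences.

```python
def swap_in_double(rolls, turn):
    """Hypothetical cheat: at `turn`, swap the current roll with the next double later in the list."""
    rigged = list(rolls)
    for later in range(turn + 1, len(rigged)):
        if rigged[later][0] == rigged[later][1]:
            rigged[turn], rigged[later] = rigged[later], rigged[turn]
            break
    return rigged

seeded = [(3, 1), (6, 5), (2, 2), (4, 1), (5, 5), (6, 2)]  # rolls fixed by the seed

game_a = swap_in_double(seeded, 0)   # first playout: the CPU "needs" a double on turn 0
game_b = swap_in_double(seeded, 1)   # second playout, different moves: needs it on turn 1

print(sorted(game_a) == sorted(seeded))   # overall statistics are unchanged
print(game_a == game_b)                   # but the two playouts no longer agree
```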

Note that this method of cheating is already made impossible by the fact that dice rolls cannot be tampered with, as completely proven using the test here. The user can expose this by setting a seed and any dodgy dice throws would be exposed as they would fail to follow the consistent sequence that the seed would dictate.

Ways to cheat: (2) Allowing the program to see upcoming dice throws.

Again, the idea that our app cheats this way has already been debunked by the test above. The program would however be cheating if it could see upcoming dice throws and choose its moves accordingly. This would allow the program to expose its pieces, knowing that the player would not get the dice throws needed to hit its blots. While this may seem like a more powerful way to cheat than rigging individual dice throws, it would be a very obvious cheat. It would be clear that the program was unnecessarily exposing its pieces simply because it knew the player would not get the dice needed. As a result, the program would appear to be taking absurd risks. This is therefore a poor way to cheat, as it would be very obvious that the program was making unsound and unsafe plays that no reasonable player would make. Leaving your pieces vulnerable when they could be secured makes no sense at all, unless you knew which dice were coming.

Note that, unlike rigging the dice, this type of cheating would be visible to all players, with no phantom defects caused by statistical chance. So sampling 120,000 simultaneous players could not cause some to see this defect by random chance. All users would be able to see this. This is not a viable way to cheat.

Can we prove a program is not cheating by counting up the total number of double and high value dice throws throughout the game?

This is a commonly used defence of a program: showing that both the AI player and the human have had their fair share of doubles and high value throws. However, this does not conclusively prove that the AI is not cheating. If there is a serious imbalance outside reasonable statistical bounds, then the program must indeed be cheating, but if the throws are evenly balanced it might still theoretically be cheating. The program could be taking its doubles when it wants and taking the low throws when it suits it. However, if you can prove that the RNG has not been tampered with (as we do above), then this way of cheating is not possible.

Although this might sound a potentially very easy cheat it would actually be very hard to effectively cheat so that it significantly impacted the outcome of the game, without showing an imbalance of throws. In reality this would be very hard to control and it would actually be easier to write a program to play fairly than to cheat effectively like this.

For that reason, AI cheating is very unlikely, and in our case, not possible as it is proven that the RNG is not tampered with.

Our Backgammon product does display the distribution of dice rolls, so that the user can see that the program is not receiving better dice roll values. However, this needs to take into account the issues raised in the answer to "With so many reviews accusing us of cheating, surely they must be right?". You might see a skewed doubles count, but with 120,000 simultaneous players many will see a skew that would be extremely unlikely in a single test, yet inevitable with 120,000 players playing simultaneously.

Has AI Factory really covered all avenues to affirm their product does not cheat?

Of course we know internally that the program plays fair, but unfair program behaviour might arise for a variety of possible reasons. The intention is "fair dice", but there are always possible mistakes that could result in an unintentional loss of fair play. The range of steps to test for this includes the following:

1. The obvious step of course is to check in the PC-based testbed that statistically the dice throws show a good distribution.

2. To check that in the testbed the RNG is delivering the same rolls outside the game engine as inside. i.e. that the seed has not been corrupted by the game engine.

3. To check that the RNG is delivering the same random numbers inside the testbed as the release APK (this is a manual check). Again checking that the APK UI has not somehow corrupted the RNG delivery.

4. Re-checking that the RNG is delivering the same random numbers inside the testbed as the release APK after different sequences of product use, such as takeback, game entry/exit, resign games and menu access. We were looking for any action of the product that might cause the RNG to stop delivering the valid sequence of random numbers compared to the raw RNG in the testbed.

5. Submission to a third party independent assessor GLI whose professional skill is to determine fair play with random numbers. They ran our RNG through a deep suite of RNG checking. They also independently built the program and verified that it had the same CRC as the Google Play version. They then scrupulously single stepped the program to affirm that the dice were not interfered with. They affirmed fair play.

6. Finally we provided access to the RNG so that users could test the program directly, to prove that it does not cheat. We have also provided this comprehensive document to help the user run tests and explain why it might appear to cheat!
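As an illustration of the kind of statistical check described in step 1, the following Python sketch computes a chi-square statistic for a sample of die faces. It is not the testbed code: chi_square_faces is a hypothetical helper and random.Random is only a stand-in for the RNG under test.

```python
import random
from collections import Counter

def chi_square_faces(faces):
    """Chi-square statistic of die faces against a uniform 1..6 expectation."""
    counts = Counter(faces)
    expected = len(faces) / 6
    return sum((counts.get(face, 0) - expected) ** 2 / expected
               for face in range(1, 7))

rng = random.Random(2023)  # stand-in generator, not the app's RNG
sample = [rng.randint(1, 6) for _ in range(60_000)]
print(round(chi_square_faces(sample), 2))
```

With six faces there are 5 degrees of freedom, so for fair dice a statistic above roughly 11.07 would be expected only about 5% of the time. A single high value is therefore not proof of a fault, but persistently high values would be a warning sign.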

Jeff Rollason - September 2023