Author: Jeff Rollason - Updated 1st September 2023

This is a return to a topic first raised in 2010 about users' perception that AI Factory's (and every other publisher's) Backgammon program cheats. Despite our efforts, this belief persists, so we return here to find better ways to explain it.

Gaming Laboratories International (GLI) tested our Backgammon Free (version 2.241, published on October 5, 2017) for fair play. You can read the results here: www.aifactory.co.uk/gli.htm

Background

Unfortunately one common feature shared by ALL Backgammon programs is that they are ALL generally accused of cheating. Even the top world-class programs that compete in human competition using real dice attract such claims (see www.bkgm.com/rgb/rgb.cgi?menu+computerdice).

Why is this? Backgammon is one of the easier games to program, and most reasonably capable games programmers could create a viable Backgammon-playing program. Paradoxically, it is harder to write a program that cheats convincingly than to write a simple program that just plays the game. As a general guide, the better the program, the more likely people are to accuse it of cheating.

As the reader might imagine, we have also received a deluge of hostile reviews accusing our program of cheating, although we have suffered much less than many of our competitors, who seem to have been driven to distraction trying to counter these claims. In the last 2 years (to Aug 2023) we have had 1030 claims that we cheat. That is just 0.04% of all our users, so a very small group; 99.96% do not complain. We have also had 101 users who have taken the time to post reviews defending us and stating that the program does not cheat, which is nice as they have less motivation to write! Of course, to dispel any lingering doubts, we have tested our app to death and had it thoroughly and professionally tested by GLI (above).

We are therefore jumping to both our defence and also to the defence of our competitors as well!

As for AI Factory's credibility, we have created AI-based games that successfully compete in international competition. Our best program, "Shotest", has twice been ranked number 3 in the world. It also won Gold, Silver, and Bronze medals in the World Computer Olympiads in the years 2001, 2000 and 2002. We publish in the most prestigious Artificial Intelligence journals (see www.aifactory.co.uk/AIF_Publications.htm) and have lectured on AI at Northeastern University, Imperial College London University, Queen Mary London University, Tokyo University of Technology, Curreac Hamamatsu Japan and game conferences such as the AI Summit at GDC.

The idea that we would create the relatively simpler game of Backgammon and have it cheat is insane: we would have no reason to do so, because it would only damage the product for no benefit. The same applies to all the other Backgammon programs, even though almost all will have been created with a weaker AI background than ours.

If we were so poor at engine writing that we could not create one without cheating, why would we? We could instead pick up one of several good free Backgammon sources, such as GNU Backgammon, and use it. We would not even need to write a game engine, saving ourselves a lot of effort. No, instead we created our own Backgammon engine.

If we were going to cheat surely we would cheat to help the user win to attach them to the app. If we cheat to destroy them we just annoy them and they will stop playing!

However now we are stepping back and assessing why this ludicrous situation has arisen.

This article has a look at the phenomenon.

So why are programs accused of cheating?

This divides into multiple reasons, but of course the root of much of the suspicion lies in whether the dice are fair. Part of this stems from whether they are random or not.

How good are humans at detecting whether or not numbers are random?

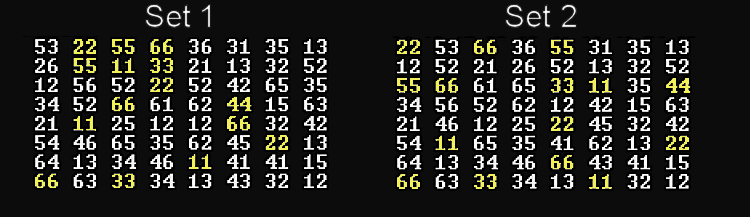

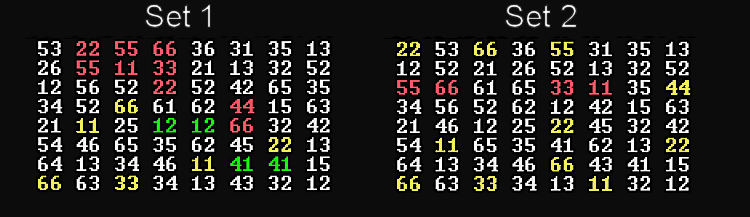

One issue in fair dice is how often doubles occur. Take a look at these two sets of numbers below (doubles are marked in yellow). One of these sets of dice rolls is random and the other is not. Can you tell which is which? When you have decided, jump down to the last section of this article and check your result.

Primary reasons for believing programs cheat:

(a) The impact of 120,000 players per day

(b) The deliberate play by the program to reach favourable positions

(c) Apophenia - Human perception of patterns

(a) The impact of 120,000 players per day

This sounds like a curious basis for proposing a reason for perceived cheating, but is very real. Our Backgammon has some 120,000 players per day. With that many people playing it is inevitable some of them will see what appear to be unfair rolls. If all these 120,000 players were playing in the same room, then any player thinking they see a bias in the rolls could talk to the people near them and quickly find no-one else saw this bias. They would then realise that it was not cheating and they had just seen unlucky rolls.

However everyone is only connected via Google Play review and players only get to see those that complain.

This effect is actually recognised by Littlewood's Law, formulated by British mathematician John Edensor Littlewood, a principle used to predict the frequency of extremely rare events. Single events may look like "miracles" but with many possible observers (or events) the event can then become highly probable.

That would obviously be a problem, but we took that one step further to actually quantify it.

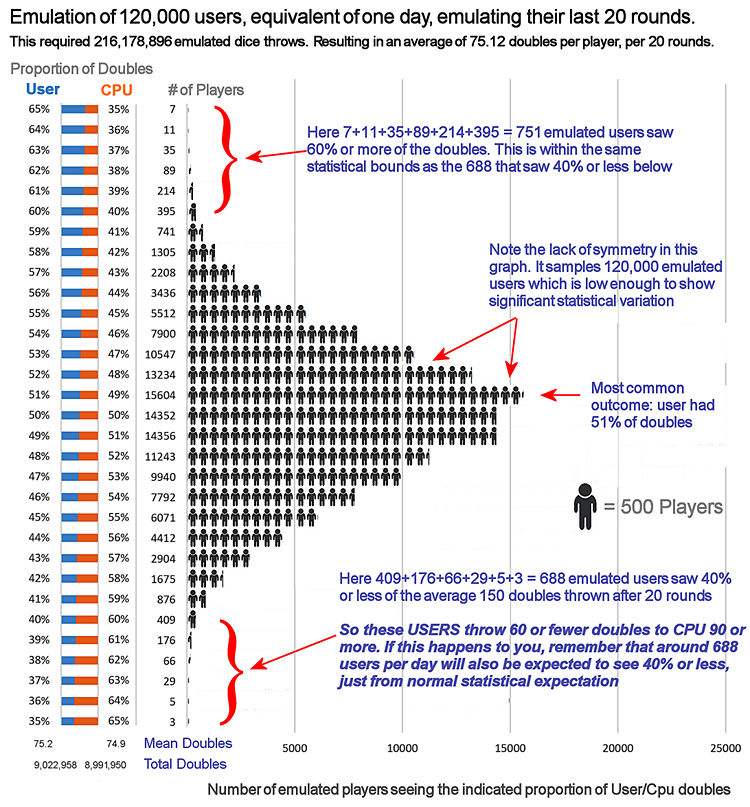

So we set up a massive test within the app to emulate a block of users each playing 20 rounds of Backgammon, logging the number of doubles for the CPU and the player. This was repeated for 120,000 emulated players, totalling 120,000 x 20 = 2,400,000 rounds. Each block of 20 rounds averaged 75.12 doubles rolled for each side. In all this required 216,178,896 dice rolls. These are the results:

What should jump out is that, of 120,000 players, some 688 will receive 40% or less of all the doubles thrown, purely from expected statistical variation. Any of the 120,000 players per day might write a review. The vast bulk of them will just play the game for fun and will not be counting doubles. Another large group will see dice rolls that vary between slightly favourable for the player and slightly favourable for the CPU. A much smaller group will see the player getting much better rolls (they will not write a review). Finally, a very small group will see the CPU getting much better rolls, just by statistical chance, and some of them will then write a bad review saying the dice are fixed. From the results above we should expect 688 players to get 40% or less of the doubles. That might look bad, but although 688 players will see such poor dice, the dice rolling was fair. They simply got unlucky.
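As a rough cross-check of this kind of figure, the tail of a binomial distribution can be computed directly. The sketch below is not the emulation described above: it assumes a simplified model in which exactly 150 doubles are thrown per player and each double is equally likely to fall to the player or the CPU. The function names and the fixed total of 150 are illustrative assumptions. Because the real emulation lets the number of doubles vary, and because the result depends on whether the boundary is "40% or less" or "less than 40%", this will not reproduce the 688 figure exactly; the point is only that the expected count is of the same order.

```python
from math import comb


def prob_share_at_most(total_doubles: int, percent: int) -> float:
    """Probability that the player receives at most `percent`% of the doubles,
    assuming each double is equally likely to go to the player or the CPU."""
    # Largest count k with k / total_doubles <= percent / 100 (integer arithmetic).
    k_max = (total_doubles * percent) // 100
    favourable = sum(comb(total_doubles, k) for k in range(k_max + 1))
    return favourable / 2 ** total_doubles


def expected_unlucky_players(players: int, total_doubles: int, percent: int) -> float:
    """Expected number of players who see such a share purely by chance."""
    return players * prob_share_at_most(total_doubles, percent)


if __name__ == "__main__":
    # Simplified model: 120,000 players, 150 doubles each, 40% threshold.
    n = expected_unlucky_players(120_000, 150, 40)
    print(f"Expected players per day with <= 40% of 150 doubles: {n:.0f}")
```

Integer arithmetic is used for the threshold so that the 40% boundary is not shifted by floating-point rounding.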

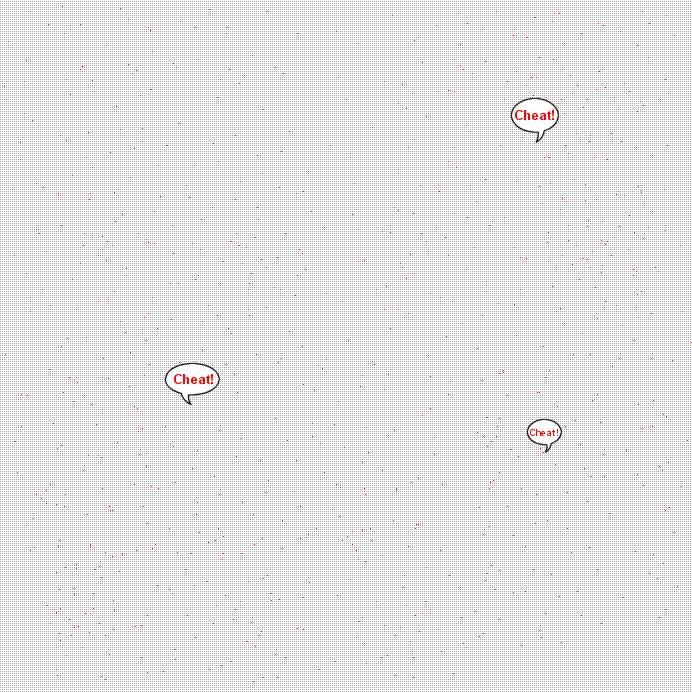

The concept may still be difficult to visualise, so we have another way of demonstrating it. The grid below represents 120,000 players playing per day, with each player represented by a grey dot. Players who would expect to receive only 40% of the last 150 doubles by random chance are marked with a red dot (688 players). Of these 688 players, 3 are marked with a "cheat" bubble. We receive about 3 accusations of cheating per day, but these are just 3 players out of the 688 who would normally expect to see such a biased skew in favor of the CPU due to normal statistical variation.

However what they see is not significant and is what they should expect to see. It is most certainly not evidence of cheating.

(b) The deliberate play by the program to reach favourable positions

This reason is much more subtle, but still real and is a key side effect of the nature of the game. Paradoxically, the stronger a program is, the greater the impression is that it might be cheating. This is not because human players are being bad losers but a statistical phenomenon. When you play a strong program you will find that you are repeatedly "unlucky" and that the program is repeatedly "lucky". You will find very often that the program gets the dice it needs to progress while you don't. This may seem like a never-ending streak of bad luck.

Note! This is not like section (a) above, where a cited single statistical result seen by one user is invalidated because 120k daily players have an opportunity to generate the same result, but most will not see it. This instead is seen by all of the 120k simultaneous daily users. Every one of the 120k players will be able to see this apparent skew.

Surely this must be cheating??

No, it is not. What is happening is that the program is making good probability decisions. It is making plays that leave its own counters in places where there is a high probability that follow-on throws will allow it to continue successfully, and that also reduce the chance that its opponent will be able to continue.

The net effect is that your dice throws frequently do not do you any good whereas the program happily gets the dice throws it needs to progress.

This may be hard to visualise. After all it is not chess, where a program can exactly know what is going to happen. In Backgammon the program does not know what the future dice throws will be, so it needs to assess ALL possible throws that might happen and play its counters with the current dice so that it leaves the best average outcome for all possible future dice throws.

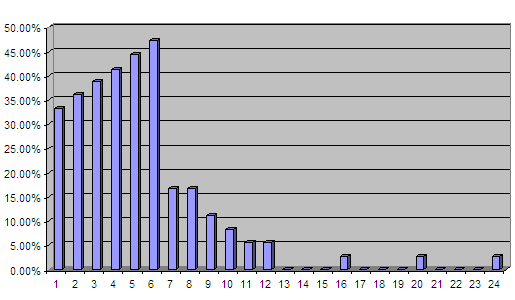

How are these distributions used?

Key to both allowing your counters to get to the squares they need, and blocking opponent plays, is the distribution of dice throws. From schooldays everyone knows that with a single dice all the numbers 1 to 6 have equal probability and that with two dice the most likely throw is a 7, with 6 or 8 next most likely, in a nice symmetrical distribution of probabilities.

However the distribution of likelihood of achieving a value with 2 dice, where you can use either a single dice or both dice, is not so immediately obvious. See below:

Here the most likely value to achieve is a 6, with 5 next most likely, but 7 is now a low likelihood. Computer Backgammon programs know this and will arrange their pieces such that they are spaced to take advantage of this distribution. A program will not want to put a pair of single counters next to each other but will instead try and put them 6 squares apart.
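The distribution described here can be reproduced by enumerating all 36 ordered throws of two dice. This is a minimal sketch under two stated assumptions that are not spelled out in the text above: a double may be played up to four times (the standard Backgammon rule), and no intermediate point is blocked. The function names are illustrative only.

```python
from collections import Counter


def reachable_distances(a: int, b: int) -> set[int]:
    """Distances a single counter could move with the throw (a, b)."""
    if a == b:
        # Assumption: a double can be played up to four times.
        return {a * k for k in range(1, 5)}
    # Otherwise use either die alone, or both dice together.
    return {a, b, a + b}


def ways_to_reach() -> Counter:
    """For each distance, count how many of the 36 throws can achieve it."""
    counts: Counter = Counter()
    for a in range(1, 7):
        for b in range(1, 7):
            for distance in reachable_distances(a, b):
                counts[distance] += 1
    return counts


if __name__ == "__main__":
    for distance, ways in sorted(ways_to_reach().items()):
        print(f"{distance:2d}: {ways:2d}/36")
```

Under these assumptions a distance of 6 can be reached by 17 of the 36 throws, whereas 7 can be reached by only 6, which is why spacing matters so much.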

The overall net effect is that, on average, the program makes good probability choices and will have a better chance of good positions after the next throw, while the human opponent does not.

These good AI choices will mean that your counters are restricted with lower chances of getting dice throws that allow them to move, whereas the program's counters leave themselves on points where they have a high probability of getting a dice throw that allows them to proceed.

This looks like plain "bad luck", but is actually because of good play by your opponent.

(c) Apophenia - Human perception of patterns

The answer to the earlier test of random versus non-random sets is shown below:

Set 1 was the random set. Features of both sets are marked in colour as follows, in this priority order:

Where 2 doubles are next to each other they are coloured in red. Where 2 identical rolls are next to each other they are marked in green and finally all other doubles are marked in yellow.

Set 2 actually has exactly the same dice rolls, but has simply been put through declustering to move similar dice rolls away from each other. They have the same dice rolls, but are just ordered differently. However in searching for non-randomness the human eye most easily imagines that Set 1 is non-random as it detects more obvious patterns. But the human eye should be more suspicious of Set 2 as it fails to show patterns that randomness would produce!

There is a credible biological reason for this. Humans and probably all species are over-sensitive to patterns, giving them survival advantages. This is an understood phenomenon, "Apophenia", as follows:

Apophenia is a term used to describe the tendency or inclination of individuals to perceive patterns or connections in random or unrelated data or events. It refers to the human tendency to find meaningful patterns and significance in various stimuli, even when there may not be any actual correlation or connection.

The term "apophenia" was coined by the German psychiatrist Klaus Conrad in the early 1950s. He originally used it to describe the tendency of individuals with schizophrenia to perceive false connections and see patterns in meaningless or random stimuli.

However apophenia is not limited to individuals with mental health conditions and can be observed in the general population. It is a cognitive bias that affects how people interpret and understand the world around them. The human brain is wired to recognize patterns, and sometimes this pattern-seeking behaviour leads to the perception of connections or meanings that are not based on objective evidence.

To put this in a biological context, a caveman might approach a wood and his brain might accurately assess that there is a 10% chance that there is a tiger hiding in the wood. However the brain transmits this as a 90% chance and the danger is avoided. The caveman that receives the signal of a 10% chance then enters the wood and 10% of such individuals die. Over a period of generations the cavemen that received the exaggerated signals do not die and they become the dominant surviving group that pass on their genes.

Can we prove a program is not cheating by counting up the total number of double and high value dice throws throughout the game?

This is a commonly used defence of a program: showing that both the AI player and the human have had their fair share of doubles and high value throws. However this does not conclusively prove that the AI is not cheating. If there is a serious imbalance, then indeed it must be cheating, but if the throws are evenly balanced it might still theoretically be cheating. The program could be taking its doubles when it wants them and taking the low throws when it suits it.

Although this might sound a potentially very easy cheat it would actually be very hard to effectively cheat so that it significantly impacted the outcome of the game, without showing an imbalance of throws. In reality this would be very hard to control and it would actually be easier to write a program to play fairly than to cheat effectively like this.

For that reason, AI cheating is very unlikely.

Our Backgammon product does display the distribution of dice rolls, so that the user can see that the program is not receiving better dice roll values. Our program also allows the player to roll their own dice and input the amounts, making AI cheating impossible.

Conclusion and External References

The reality is that almost certainly all claims of cheating are misplaced. The appearance of cheating is a consequence of so many people playing that some instances of extraordinary luck are inevitable, and it is also potentially evidence that the program is playing well, making very good probability decisions.

Do not just take our word for it. This is widely discussed in the Computer Backgammon world as follows:

Backgammon Article, which leads to sections addressing why programs are accused of cheating: www.bkgm.com/articles/page6.html

Computer Dice: www.bkgm.com/rgb/rgb.cgi?menu+computerdice (this sub-link of the above lists discussion of claims of cheating against all the most serious Backgammon programs)

Computer Dice forum from rec.games.backgammon, raising the issue of claims of cheating and how to address this: www.bkgm.com/rgb/rgb.cgi?view+546

Footnotes:

A full history of the first 15 years can be read here 15 years of AI Factory.

AI Factory was incorporated in 2003. The academic foundation comes from Jeff Rollason, who lectured in Computer Science at Westfield College UOL (University of London) and then at Kings College UOL, between 1980 and 2001, the latter where he lectured in computer architecture for the second largest course in the department. He also supervised many Artificial Intelligence projects. He has lectured and presented papers at Northeastern University, Imperial College London University, Queen Mary London University, Tokyo University of Technology, Curreac Hamamatsu Japan and game conferences such as the AI Summit at GDC. This is the AI Factory list of publications. Note also AI Factory's periodical.

AI Factory's primary objective was to create and license Artificial Intelligence (AI) game engines to game companies. Among their customers was Microsoft, who took AI Factory's chess engine "Treebeard" for use in their MSN Chess. They later commissioned AI Factory to write a poker engine for their Xbox product "Full House Poker". AI Factory also created an AI simulation of a fish tank for the Japanese publisher Unbalance. In the 20 years since inception AI Factory has created many game engines and now primarily publishes to Google Play directly, but you will find their engines on Xbox, PC, Japanese mobile, Sony PSP, Symbian, Gizmondo, in-flight entertainment, Antix, Leapfrog, iOS, Android, Andy Pad and Amazon Fire.

The AI from AI Factory stems from Jeff Rollason's background in AI. He had authored Chess, Go and Shogi programs that had all competed in international competitions. The most noteworthy of these was the Shogi program "Shotest" that competed in many Computer Shogi tournaments in Tokyo, Japan, where in 1998 it came 3rd from 35 competing programs and 1999 also 3rd from 40 programs, coming very close in 1998 to winning the world championship outright as in the last round only Shotest and IS Shogi could win and Shotest had already beaten AI Shogi in an earlier round. Shogi is a substantially greater AI challenge than Chess, but less than Go, because the theoretical maximum number of moves is 593 (average 80) compared to 218 (average 30) for chess. This greatly increases the game complexity. Shotest has achieved the highest World ranking for a western Shogi program, beating 3 World Champions in the year they were World Champion.

From this it can be seen that AI Factory has both a strong industrial and academic background, having created a solid base of credible AI.

FAQ for Backgammon: Your important questions answered

With so many reviews accusing us of cheating, surely they must be right?

At first glance, this may seem like a reasonable statement. It's natural to assume that if you believe something and find that many others share your belief, then you must be correct. This is what we call "common sense." However, this is actually a matter of exposure. If all 120,000 of our daily players were in the same location playing the game, you might notice some bias and mention it to the players near you, but they might not see it. As a result, you would dismiss your bad luck as insignificant.

On the other hand, if all these players were in different locations and connected to Google Play, and wrote a review accusing the game of cheating, then you would only see the reviews of people who had the same experience. These people would represent only a tiny fraction of all players. Players who don't see any bias wouldn't be motivated to go to Google Play and write a review just to say that the game is fair because they have no reason to do so. That being said, we have had about 100 people who, after seeing the cheating accusations, went to Google Play to say that the game does not cheat. We are grateful for their support!

The point is that if players were exposed to all other players, they would quickly realise (and correctly) that the game does not cheat because almost anyone they ask will not have seen the bias they saw. Therefore, it was just chance, not cheating.

This effect is actually recognised by Littlewood's Law, formulated by British mathematician John Edensor Littlewood, a principle used to predict the frequency of extremely rare events. Single events may look like "miracles" but with many possible observers (or events) the event can then become highly probable.

How are the random numbers generated in games?

In an ideal world, games would use atomic quantum events to generate random numbers. However, this is not a practical solution as it would require an online lookup from a server. The device may not always be connected to the internet, and even if it is, such a lookup could introduce delays. Instead, the program uses a standard linear congruential generator (LCG) RNG algorithm to generate dice throws. This is a common choice for games and provides good pseudo-random numbers, making it ideal for emulating dice throws.
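To illustrate the general technique, here is a minimal sketch of a linear congruential generator producing dice throws. The constants are the widely published "Numerical Recipes" parameters and are an assumption made for illustration only; the parameters actually used in the app are not stated in this article. The class name `LcgDice` and the way the state is mapped to a die face are likewise hypothetical.

```python
class LcgDice:
    """Toy LCG: state = (A * state + C) mod M, mapped to die faces 1..6."""

    A = 1664525        # multiplier (assumed, Numerical Recipes constants)
    C = 1013904223     # increment (assumed)
    M = 2 ** 32        # modulus (assumed)

    def __init__(self, seed: int) -> None:
        self.state = seed % self.M

    def next_die(self) -> int:
        """Advance the generator one step and return a face from 1 to 6."""
        self.state = (self.A * self.state + self.C) % self.M
        # Use the high bits: the low bits of a power-of-two LCG repeat quickly.
        return (self.state >> 16) % 6 + 1

    def roll(self) -> tuple[int, int]:
        """Return one throw of two dice."""
        return self.next_die(), self.next_die()


if __name__ == "__main__":
    dice = LcgDice(seed=2023)
    print([dice.roll() for _ in range(5)])
```

The key property is that the sequence depends only on the seed and the number of steps taken, never on the state of the board, so the same seed always yields the same throws.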

Why might a game developer program their Backgammon program to cheat?

This goes right to the heart of the issue. The only reason would be that the developer was unable to easily write a program that was good enough to play without cheating. This would indeed be a very desperate step to take as this would invite bad reviews and surely badly damage the sales/downloads of the program. Their program would be doomed if everyone thought it cheated.

How difficult is it to write a reasonable backgammon program that does not cheat?

If a developer could create a program that was good enough to play without cheating, they would surely do so to avoid damaging their reputation. This raises the question: How difficult is it to develop such a program? The answer is, "Not very difficult." Writing a plausible Backgammon program is much easier than writing a program for games like chess or checkers. Those games require search lookahead and the implementation of minimax and alpha-beta algorithms, combined with a reasonable static evaluation. Even with these core algorithms, the resulting program may still be relatively weak and struggle to win any games. However, Backgammon is simple enough that a high level of play can be achieved without any search at all. Simple sources for such a program, published at Berkeley, are freely available for programmers to use. That program plays at a level that is good enough for a releasable game product. We tested our program against it. You can read our article on this and our program development here: Tuning Experiments with Backgammon using Linear Evaluation.

Why didn't AI Factory use cheating in their product?

From our perspective, the question would be, "Why on earth would we do this crazy thing???". Cheating has no advantages and massive disadvantages. It would be absurd for us to cheat, as we have very strong internal AI development skills. We publish in prestigious AI journals, lecture on AI at universities around the world, and compete in international computer game tournaments. We have competed in Chess, Go, and Shogi computer tournaments, and most notably in computer Shogi (Japanese Chess) with our program Shotest. Shogi is a much more difficult game to program than either Chess or Backgammon. Shotest was ranked #3 in the world twice and nearly ranked #1. It also won Gold, Silver, and Bronze medals in the World Computer Olympiads in the years 2001, 2000 and 2002. It is unimaginable that we would want to cheat when we have more than enough internal AI expertise to easily and quickly develop a good program without cheating. Cheating would only result in reputation loss with absolutely no gain.

Why did AI Factory have Gaming Labs International test their program for cheating??

Even though we knew that our program did not cheat, we wanted to convince others of this fact. To do so, we needed a professional, independent third-party assessment to certify "fair play" that we could present to our users. Gaming Laboratories International (GLI) took our source code and confirmed that the version they built matched the version on Google Play. They then analyzed the dice throws and single-stepped through the source code to determine that the program did not tamper with the dice or engage in any processing that could be linked to cheating. GLI confirmed "fair play." Of course, this was only for a specific version and was not repeated for every minor update we made to the program. However, the version they tested as "Fair Play" (2.241) was published on October 5, 2017, and remained in release until May 24, 2018, for a total of 7 1/2 months. This version received just as many claims of cheating as any version before or after it. Therefore, it was a completely valid proof of no cheating where people had already claimed it cheated.

Why might it seem like the program is frequently getting the throws it needs?

This is addressed in the section above, "(b) The deliberate play by the program to reach favourable positions." This is probably the most common reason that users believe the program cheats. Ironically, the better the program, the greater the impression of cheating. Users who are bothered by this might be better off searching for weaker programs. The strength of the program lies in its subtle play to achieve a very good statistical game, leaving pieces in positions with reduced vulnerability and a better chance of making progress. Users should read the section above, as the distribution of possible values that can be achieved with two dice is not as obvious as it might seem.

If a user sees a distribution of throws or doubles that seems extremely unlikely for an individual player to experience, why might this not indicate that cheating is occurring?

This is addressed in the section above, "(a) The impact of 120,000 players per day". The issue is that a particular result that a user sees, when plugged into a binomial distribution, may appear to be extremely improbable. However, this overlooks the fact that about 120,000 players per day have a chance to see such an improbable distribution. Given this, hundreds of people per day would be expected to see such an outcome. It is therefore extremely unlikely that, on any given day, no one in the entire population of users would see such a distribution. In fact, it would be very suspicious if no one saw a skewed distribution of rolls, as normal probability predicts that some will.
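The reasoning above can be expressed in a few lines. This is a sketch under the assumption that players' results are independent; the function names and the example probability of 1 in 1,000 are illustrative and do not come from our test data.

```python
import math


def expected_witnesses(p_single: float, players: int) -> float:
    """Expected number of players who see an event of probability p_single."""
    return p_single * players


def prob_someone_sees(p_single: float, players: int) -> float:
    """Probability that at least one of `players` independent players sees it.

    Equivalent to 1 - (1 - p)^N, computed with log1p/expm1 so that very
    small probabilities do not lose precision.
    """
    return -math.expm1(players * math.log1p(-p_single))


if __name__ == "__main__":
    p = 1 / 1000          # hypothetical: a result one player sees 1 time in 1,000
    n = 120_000           # daily players, as quoted in this article
    print(f"Expected players seeing it per day: {expected_witnesses(p, n):.0f}")
    print(f"Chance at least one player sees it: {prob_someone_sees(p, n):.6f}")
```

An event that looks like a "miracle" to one player becomes a near certainty somewhere in a population this large.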

Ways to cheat: (1) Fixing the dice throws.

Let's take a look at how a programmer might try to cheat. One approach could be to manipulate the values of the dice thrown, such as the number of doubles thrown or giving the dice throw that hits an opponent's blot. However, this would be difficult to implement convincingly as an effective cheat, as it would be fairly obvious. If the program frequently got the dice throws it needed, the excess of luck would likely be clear. It could also impact the lifetime stats of doubles thrown, etc. A problem here is that the stats log counts items such as total dice value thrown and the number of doubles, but it does not list the frequency that a user successfully achieved a hit. This is not an obvious stat to list. Unfortunately, users tend to notice when they have been hit but not when they have escaped a hit. They tend to notice bad luck but overlook good luck. Their attention is mainly focused on lucky hits against them, which can skew their perception of fairness. However this method of cheating can be blocked by allowing the user to set the random seed. If the user can do this then it is no longer possible to change rolls as the user could set a seed and then any dodgy dice throws would be exposed as they failed to follow the consistent sequence that the seed would dictate. We are looking to add this seed set to the app.

Ways to cheat: (2) Allowing the program to see upcoming dice throws.

Alternatively, the program could cheat by seeing which throws were coming and choosing its moves accordingly. This would offer the advantage of allowing the program to expose its pieces, knowing that the player would not get the dice throws needed to hit its blots. While this would be a more powerful way to cheat than fixing individual dice throws, it would be a really obvious cheat. It would be very clear that the program was unnecessarily exposing its pieces and taking absurd risks, simply because it knew the player would not get the dice needed. This is therefore a very poor way to cheat, as it would be very obvious that the program was making unsound, unsafe plays that no reasonable player would make.

Why not provide players with the RNG seed and future throws so they can confirm that these match the actual throws given in the game?

We considered this option a long time ago. However, for many less sophisticated players, this could lead to the conclusion that the dice are already fixed since they are known in advance. This is true of any algorithm-based random number generator. Many users might see this list of throws to come as proof of cheating, despite the fact that this is the only way such a game could generate automated dice throws. By providing such a list, many users would see it as advance proof of our cheating. Sadly, we realised that this would most likely increase claims of cheating rather than decrease them.

However a new idea would be to allow the user to set the seed so that they could log the dice rolls. If the user also had an option to swap the first 2 dice, they could repeat the game with each side receiving the dice set the other had received from that seed. They could confirm that the dice rolls were consistent and measure how well the CPU managed with each dice set. This is now implemented in the updated engine and will appear in a coming release. This will allow users to prove what we already knew, that the engine does not cheat.
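The idea can be sketched as follows. This is an illustration only, not the engine's implementation: it uses Python's built-in `random.Random` rather than the app's RNG, and it interprets the swap as exchanging which side receives which stream of throws. The function name `deal_rolls` and its parameters are hypothetical.

```python
import random

Roll = tuple[int, int]


def deal_rolls(seed: int, turns: int, swap: bool = False) -> tuple[list[Roll], list[Roll]]:
    """Return (player_rolls, cpu_rolls) generated from a user-chosen seed.

    With swap=True each side receives the throws the other side would
    have received from the same seed.
    """
    rng = random.Random(seed)
    first: list[Roll] = []
    second: list[Roll] = []
    for _ in range(turns):
        first.append((rng.randint(1, 6), rng.randint(1, 6)))
        second.append((rng.randint(1, 6), rng.randint(1, 6)))
    return (second, first) if swap else (first, second)


if __name__ == "__main__":
    player, cpu = deal_rolls(seed=12345, turns=5)
    player_swapped, cpu_swapped = deal_rolls(seed=12345, turns=5, swap=True)
    # The same seed must reproduce the same throws, with the sides exchanged.
    print(player == cpu_swapped and cpu == player_swapped)
```

Because the throws are fixed by the seed before any move is played, a user who logs them can detect any throw that departs from the expected sequence.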

Has AI Factory really covered all avenues to affirm their product does not cheat?

Of course we know internally that the program plays fair, but unfair program behaviour might arise for a variety of possible reasons. The intention is "fair dice" but there are always possible mistakes that could result in unintentional loss of fair play. The range of steps includes the following:

1. The obvious first step, of course, is to check in the PC-based testbed that statistically the dice throws show a good distribution.

2. To check that in the testbed the RNG is delivering the same rolls outside the game engine as inside. i.e. that the seed has not been corrupted by the game engine.

3. To check that the RNG is delivering the same random numbers inside the testbed as the release APK (this is a manual check). Again checking that the APK UI has not somehow corrupted the RNG delivery.

4. Re-checking that the RNG is delivering the same random numbers inside the testbed as the release APK after different sequences of product use, such as takeback, game entry/exit, resign games and menu access. We were looking for any action of the product that might cause the RNG to stop delivering the valid sequence of random numbers compared to the raw RNG in the testbed.

5. Submission to a third-party independent assessor, GLI, whose professional skill is to determine fair play with random numbers. They ran our RNG through a deep suite of RNG checking. They also independently built the program and verified that it had the same CRC as the Google Play version. They then scrupulously single-stepped the program to affirm that the dice were not interfered with. They affirmed fair play.
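As an illustration of the kind of statistical check mentioned in step 1, the sketch below applies a chi-square goodness-of-fit test to the frequency of each die face. It is not our testbed code or GLI's test suite: the function names are hypothetical, Python's built-in generator stands in for the app's RNG, and the threshold is the standard chi-square critical value for 5 degrees of freedom at the 1% level.

```python
import random
from collections import Counter

# Chi-square critical value for 5 degrees of freedom at the 1% significance level.
CRITICAL_5DF_1PCT = 15.086


def chi_square_faces(rolls: list[int]) -> float:
    """Chi-square statistic comparing face counts with a uniform 1..6 die."""
    counts = Counter(rolls)
    expected = len(rolls) / 6
    return sum((counts[face] - expected) ** 2 / expected for face in range(1, 7))


def looks_fair(rolls: list[int]) -> bool:
    """True if the face frequencies are consistent with a fair die at the 1% level."""
    return chi_square_faces(rolls) < CRITICAL_5DF_1PCT


if __name__ == "__main__":
    rng = random.Random(2023)  # stand-in RNG, not the app's generator
    sample = [rng.randint(1, 6) for _ in range(60_000)]
    print(f"chi-square = {chi_square_faces(sample):.2f}, fair = {looks_fair(sample)}")
```

Note that even a perfectly fair generator will fail a 1% test about one time in a hundred, which is the same statistical effect discussed throughout this article.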

Jeff Rollason - August 2023